Effective Model to Measure Customer Experience

Alarming news keeps coming from the US retail front. The recent wave of store closure announcements from Gap, American Apparel, Kohl’s, Sears and Macy’s, which alone is set to shut down about 15% of its stores in 2017, sent the industry reeling. Sure, we can point our fingers at digital commerce and today’s sweeping trend for bargain hunting. But total US retail sales are doing just fine, growing at a steady 3% annually.

On closer inspection, it’s evident that American buyers don’t spend less. They just choose to buy from some brands instead of others. The competition for a bigger share of wallet is heated, and companies increasingly rely on the secret sauce of customer experience as their new competitive advantage in the age of the customer.

To manage customer experience is to know how to measure it. But how can a brand reliably measure customer experience to check whether everything is fine and, if not, to identify the issues correctly? Naturally, brands go to customers themselves for this information as the starting point of their customer experience management (CXM) routine.

Surprisingly, it may not be the only place to look for this information.

Why customer satisfaction scores are not enough

Brands that measure CX rely largely on customer satisfaction surveys (CSS) of some sort, be it Net Promoter Score, CSAT, Customer Effort Score or a custom metric. But based on our experience of consulting on customer experience management in B2C, voice of the customer programs provide just a fraction of the information required for effective CX measurement.

Looking at CX Index 2016 for US federal agencies, Forrester had to admit that over 80% of the time, customers’ surveyed opinions on what would make their experience better just didn’t apply to reality. As brilliantly put by Colin Shaw of Beyond Philosophy,

“What people do is much different than what people say they will do. People say they want the option of salad at Disneyland because “I’d like to eat healthier when I am at the park.” But then, when that same person smells a delicious and unhealthy churro, they ignore the salads Disneyland presents and buy the churro because “I’m on vacation, and I deserve a treat”… Asking questions doesn’t work. Why? Because the customers don’t know the answers.”

In essence, CSS stand for what customers think they want, which may present a rather selective, not to mention subjective, picture.

What customer satisfaction surveys are not:

- ongoing

- taking into account the seasonality of business (e.g., in travel & hospitality)

- precise enough to reveal the root cause of poor satisfaction in each particular case

- considering the irrationality of customers’ buying decisions driven by emotions rather than by careful deliberation

- driving innovation, since they rely on what customers have already experienced

- easily correlated to bottom line revenues

Do CSS still have the right to exist, you ask? Definitely, as they are still among the most affordable and resource-conscious ways to understand customers’ needs and wants first-hand, whatever the drawbacks. They can be a good starting point when there is a vision and a strategy to back them up. But how can a brand round out such a strategy without relying on customers’ testimonies alone?

Returning to the case of US federal agencies, Forrester suggests prioritizing the drivers of customer experience and looking at what can really make a company’s mission successful. One answer to this call is to couple CSS findings with an analysis of measurable factors that affect customer experience. This approach opens the way to an alternative model of measuring customer experience, which we discuss in detail below.

The alternative CX measurement model

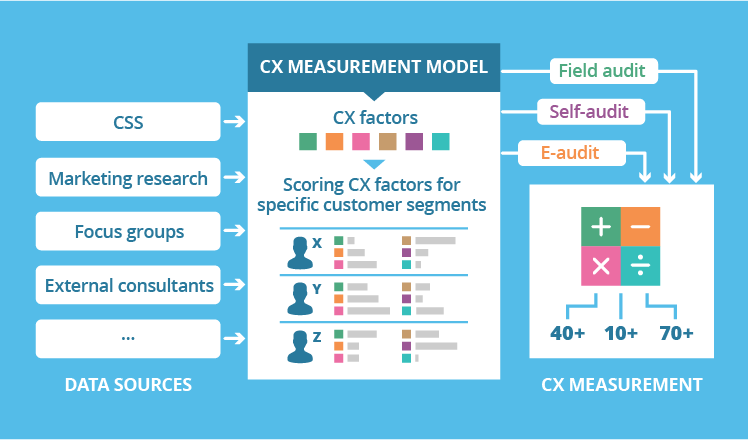

The logic behind our proposed CX measurement model is that for every brand, there is a list of customer experience factors that matter to target audiences if they are to keep buying and enjoying this brand’s products or services. Going through these factors and evaluating their actual impact on customer satisfaction results in an effective CX measurement model that can be applied to specific customer segments and touchpoints over any timeframe, without depending on a CSS routine.

Now, on to more details.

Scoring CX factors and their impact

Beyond CSS findings, brands can go to other sources of information to come up with the CX-affecting factors that are relevant specifically to them. These sources include, but are not limited to, positioning statements that shape customers’ expectations, independent marketing research, focus groups, external consultants’ insights and the assumptions of the brand’s own CX team.

Drawing on more examples from retail, CX factors specific to traditional retailers, like department stores, may feature the following:

- Out-of-stock (OOS)

- Queue length

- Tidiness

- Customer service resolution time

- Speed of delivery, etc.

Further, relying on findings from marketing studies, focus group interviews, hired consultants and / or an in-house CX team, brands can score each factor by the strength of its impact on a particular customer segment. For example, if queue length is identified as critical for lunch-break shoppers, queues that require 4 or more minutes of waiting can be scored 5 out of 5 for their highly negative impact on this specific customer segment.

Once all the relevant factors are scored, the brand can apply this ready-made model to measure customer experience when and where their CXM strategy suggests it.
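As a rough illustration, a factor / impact model of this kind could be stored as a small set of rules and applied to whatever an audit observes. The sketch below is only one possible representation: the `FactorRule` class, the `impact_score` function, the factor names and every threshold except the 4-minute queue rule for lunch-break shoppers are hypothetical and chosen for the example.

```python
from dataclasses import dataclass
from typing import List, Mapping


@dataclass(frozen=True)
class FactorRule:
    factor: str       # name of the observed CX factor
    segment: str      # customer segment the rule applies to
    threshold: float  # observed value at or above which the factor hurts CX
    impact: int       # negative impact, from 1 (mild) to 5 (severe)


RULES: List[FactorRule] = [
    FactorRule("queue_wait_minutes", "lunch_break", 4.0, 5),  # from the example above
    FactorRule("queue_wait_minutes", "weekend", 8.0, 3),      # hypothetical value
    FactorRule("out_of_stock_items", "weekend", 10.0, 4),     # hypothetical value
]


def impact_score(observations: Mapping[str, float], segment: str,
                 rules: List[FactorRule] = RULES) -> int:
    """Sum the impact of every rule for `segment` that the observations trigger."""
    total = 0
    for rule in rules:
        if rule.segment != segment:
            continue
        observed = observations.get(rule.factor)
        if observed is not None and observed >= rule.threshold:
            total += rule.impact
    return total


# One audit visit: a 5-minute queue and 12 out-of-stock items.
audit = {"queue_wait_minutes": 5.0, "out_of_stock_items": 12.0}
print(impact_score(audit, "lunch_break"))  # 5
print(impact_score(audit, "weekend"))      # 4
```

The same visit scores differently per segment, which is the point of scoring impact by segment rather than by factor alone.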

Measuring CX along the factor / impact model

With the brand-specific CX model in place, measurement itself relies on observation and auditing. This process can flow in 3 channels:

- Field audit by in-house or invited customer experience professionals visiting locations and registering data by criteria;

- Self-audit done by immediate employees in the position to collect CX data;

- E-audit by looking at the relevant data stored across enterprise systems such as CRM, ERP, product lifecycle management, accounting, etc.

For example, a grocery retailer can run a bi-monthly routine of measuring customer experience at its supermarkets in Massachusetts according to its own model of 20 relevant factors and their impact values. As a result, the retailer can quantify the customer experience delivered at different supermarkets, identify good-, neutral- and poor-performing CX factors, and launch targeted corrective action as the next step.

Say, if 13% of the supermarkets scored a 70+ point impact (a higher score means a more negative impact) on weekend shoppers, the retailer would be able to correlate this measurement with financial returns from the same locations over the observed period. If the poor impact indeed brings losses, they can drill down to the factors with alarming scores (out-of-stock items, dense traffic between stalls, etc.) and step in to make a positive change.
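To make this step concrete, here is a minimal sketch of flagging locations and checking how their impact scores relate to revenue. All store names and figures are invented for illustration, the 70-point alert level is taken from the example above, and the Pearson coefficient is just one simple way to look for a relationship; a correlation alone does not show that poor CX caused the losses.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Hypothetical audit results for weekend shoppers: total impact score per
# store and the change in that store's revenue over the observed period.
audits = {
    "store_a": {"impact": 82, "revenue_change_pct": -4.1},
    "store_b": {"impact": 45, "revenue_change_pct": 1.2},
    "store_c": {"impact": 71, "revenue_change_pct": -2.5},
    "store_d": {"impact": 30, "revenue_change_pct": 3.0},
    "store_e": {"impact": 55, "revenue_change_pct": 0.4},
}

ALERT_THRESHOLD = 70  # higher score means more negative impact

# Stores whose impact score reaches the alert level.
flagged = sorted(name for name, a in audits.items()
                 if a["impact"] >= ALERT_THRESHOLD)
share_flagged = len(flagged) / len(audits)

# Relationship between impact scores and revenue change across all stores.
r = pearson([a["impact"] for a in audits.values()],
            [a["revenue_change_pct"] for a in audits.values()])

print(flagged)                 # ['store_a', 'store_c']
print(f"{share_flagged:.0%}")  # 40%
print(r < 0)                   # True: higher impact goes with lower revenue here
```

A strongly negative coefficient would be a signal to drill down into the individual factors at the flagged stores.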

But what if the same grocery retailer surveyed shoppers instead of conducting an independent audit? At the end of the day, getting a “somewhat satisfied” response to the question “How satisfied were you with the speed of service / queuing time?” can’t clarify whether queues were alright or just bearably long, or whether it’s an alarming enough sign to take action.

Supporting CXM decisions with reliable metrics

Customer experience managers have a hard time getting accurate and actionable CX measurements. Relying solely on subjective and sometimes contradictory responses to customer satisfaction surveys can lead to wrong assumptions, or even be confusing to the point where brands just let the data lie idle.

As suggested by Kevin Leifer of ICC/Decision Services, useless customer metrics, including misinterpreted ones, never lead to real results. It’s asking the right questions, sourcing reliable answers, and taking appropriate actions that do. In this light, the news of Macy’s massive store closures may sound a bit less dramatic, knowing that the retailer is setting out to focus on its best-performing locations instead, with more vendors, seasoned talent and new technology on board.