Predictive Analytics for Insurance

Architecture, Techs, Success Stories

ScienceSoft combines 36 years of experience in data analytics and AI with 13 years in insurance software development to design and implement powerful predictive analytics solutions for insurance.

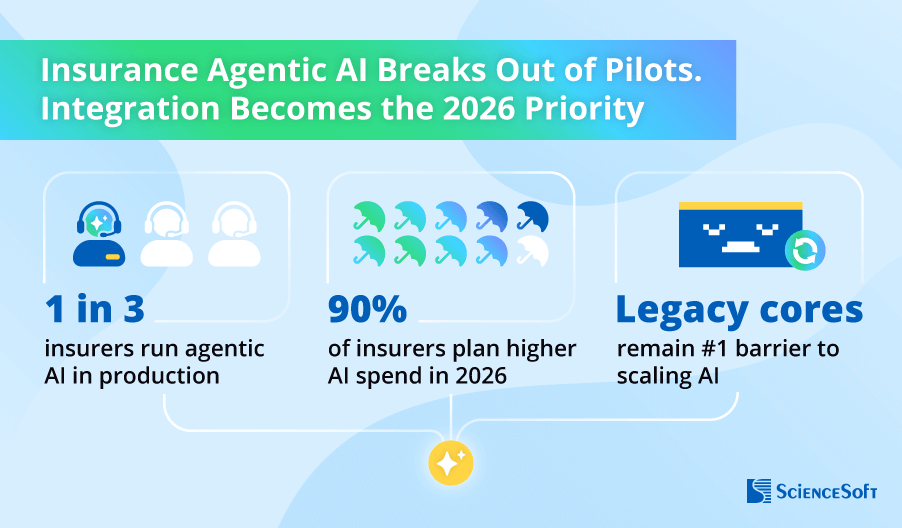

Insurance is Holding an Edge in Predictive Analytics Adoption

According to Accenture, 80% of insurers cite predictive analytics as one of the top game-changers for their business. 40% of large carriers already employ predictive analytics, and 67% plan to invest more in such technologies in the coming years.

A recent survey by BCG showed that insurance is outpacing nearly all other industries in adopting and piloting predictive systems, including generative AI systems. Driven by rapid advancements in AI, leading insurers are shifting from traditional predictive models toward more sophisticated, multi-step reasoning algorithms.

8 Key Use Cases for Predictive Analytics in Insurance

Insurance pricing

Insurance product optimization and promotion planning

Financial planning and analysis

Predictive Analytics in Insurance: How It Works

Insurance companies use intelligent predictions to identify complex dependencies and patterns in their business and customer data and precisely forecast particular future events, transactions, and performance metrics.

Predictive analytics for insurance helps make optimal decisions across insurers' business processes, minimize financial and operational risks, identify opportunities for growth, and ensure high value of insurance services for customers.

Data science models used in predictive analytics for insurance

Statistical models

Process the available numerical data and offer trend-based calculations of future insurance metrics.

Best for: predicting stable quantitative insurance KPIs.

Price: $$
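To make the idea of a trend-based calculation concrete, here is a minimal sketch that fits a straight-line trend to a KPI history by ordinary least squares and extrapolates it. The function names and the monthly claim counts are hypothetical and chosen only for illustration; production statistical models would normally also handle seasonality and uncertainty.

```python
from typing import List, Sequence, Tuple


def fit_linear_trend(values: Sequence[float]) -> Tuple[float, float]:
    """Fit y = intercept + slope * t by least squares, with t = 0, 1, 2, ..."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    t_mean = (n - 1) / 2
    y_mean = sum(values) / n
    cov = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(values))
    var = sum((t - t_mean) ** 2 for t in range(n))
    slope = cov / var
    intercept = y_mean - slope * t_mean
    return intercept, slope


def forecast(values: Sequence[float], periods_ahead: int) -> List[float]:
    """Extend the fitted trend line for the next `periods_ahead` periods."""
    intercept, slope = fit_linear_trend(values)
    n = len(values)
    return [intercept + slope * (n + k) for k in range(periods_ahead)]


# Hypothetical monthly claim counts (illustrative data, not from the article).
monthly_claims = [100.0, 104.0, 108.0, 112.0, 116.0]
next_quarter = forecast(monthly_claims, 3)
print(next_quarter)
```

Because the sample history grows by exactly four claims a month, the forecast simply continues that line; real KPI series are noisier, which is why this approach suits stable quantitative metrics best.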

Non-neural network (non-NN) machine learning models

Process multi-dimensional structured insurance data and predict a wide range of insurance variables (e.g., risk, demand, revenue, expenses) by analyzing the diverse factors that affect each variable.

Best for: batch predictive analytics.

Price: $$$$
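As an illustration of a non-NN model that predicts a variable from several structured factors, the sketch below trains a logistic regression with plain batch gradient descent. The two features (prior claims and annual mileage), the tiny data set, and all function names are assumptions made for this example; real projects would typically rely on an established ML library and far more data.

```python
import math
from typing import List, Sequence, Tuple


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def predict_proba(x: Sequence[float], weights: Sequence[float], bias: float) -> float:
    """Estimated probability of a claim for one policyholder."""
    return sigmoid(bias + sum(w * xi for w, xi in zip(weights, x)))


def train_logistic(
    rows: Sequence[Sequence[float]],
    labels: Sequence[int],
    lr: float = 0.1,
    epochs: int = 2000,
) -> Tuple[List[float], float]:
    """Fit weights and bias by full-batch gradient descent on log loss."""
    n_features = len(rows[0])
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(rows, labels):
            err = predict_proba(x, weights, bias) - y
            for j, xj in enumerate(x):
                grad_w[j] += err * xj
            grad_b += err
        weights = [w - lr * g / len(rows) for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / len(rows)
    return weights, bias


# Hypothetical training data: (prior claims, annual mileage in 10,000 km).
rows = [(0, 0.5), (0, 1.0), (1, 0.8), (0, 1.5), (2, 1.2), (3, 2.0), (2, 2.5), (4, 1.8)]
labels = [0, 0, 0, 0, 1, 1, 1, 1]  # 1 = a claim was filed

weights, bias = train_logistic(rows, labels)
p_high = predict_proba((4, 2.0), weights, bias)
p_low = predict_proba((0, 0.5), weights, bias)
print(f"high-risk profile: {p_high:.2f}, low-risk profile: {p_low:.2f}")
```

The model scores the profile with more prior claims and higher mileage as the riskier one, which is the kind of factor-based prediction this model class is used for in batch scoring.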

Deep neural network (DNN) models

Deal with massive amounts of structured and raw insurance data. DNN models automatically determine change factors for the required insurance variables and deliver accurate predictions based on the identification and analysis of complex non-linear dependencies between these factors.

Best for: real-time predictive analytics.

Price: $$$$$
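The phrase "non-linear dependencies" can be illustrated with a toy forward pass. The sketch below is a two-layer feed-forward network whose weights are hand-picked, not trained, so that the score is high when exactly one of two binary risk factors is present and low otherwise. That pattern (the textbook XOR case) cannot be reproduced by a purely additive linear model. The factor names and weights are hypothetical; a real DNN has many more layers and learns its weights from data.

```python
from typing import Callable, List, Sequence


def relu(x: float) -> float:
    """Rectified linear unit, the non-linearity between the layers."""
    return max(0.0, x)


def dense(
    inputs: Sequence[float],
    weights: Sequence[Sequence[float]],
    biases: Sequence[float],
    activation: Callable[[float], float],
) -> List[float]:
    """One fully connected layer: activation(W @ inputs + b)."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


# Hand-picked (not trained) parameters, for illustration only.
HIDDEN_W = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_B = [0.0, -1.0]
OUTPUT_W = [[1.0, -2.0]]
OUTPUT_B = [0.0]


def risk_score(factor_a: float, factor_b: float) -> float:
    """Forward pass: hidden ReLU layer followed by a linear output layer."""
    hidden = dense([factor_a, factor_b], HIDDEN_W, HIDDEN_B, relu)
    return dense(hidden, OUTPUT_W, OUTPUT_B, lambda v: v)[0]


for a in (0.0, 1.0):
    for b in (0.0, 1.0):
        print(a, b, risk_score(a, b))
```

Removing the ReLU step would collapse the two layers into a single linear function, and the interaction between the two factors would be lost.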

As cloud and AI technologies become more accessible and affordable, DNN-based predictive analytics solutions are being adopted more widely across the insurance industry. They let insurers make the most of the available data and get highly precise, near real-time forecasts with minimal manual effort.

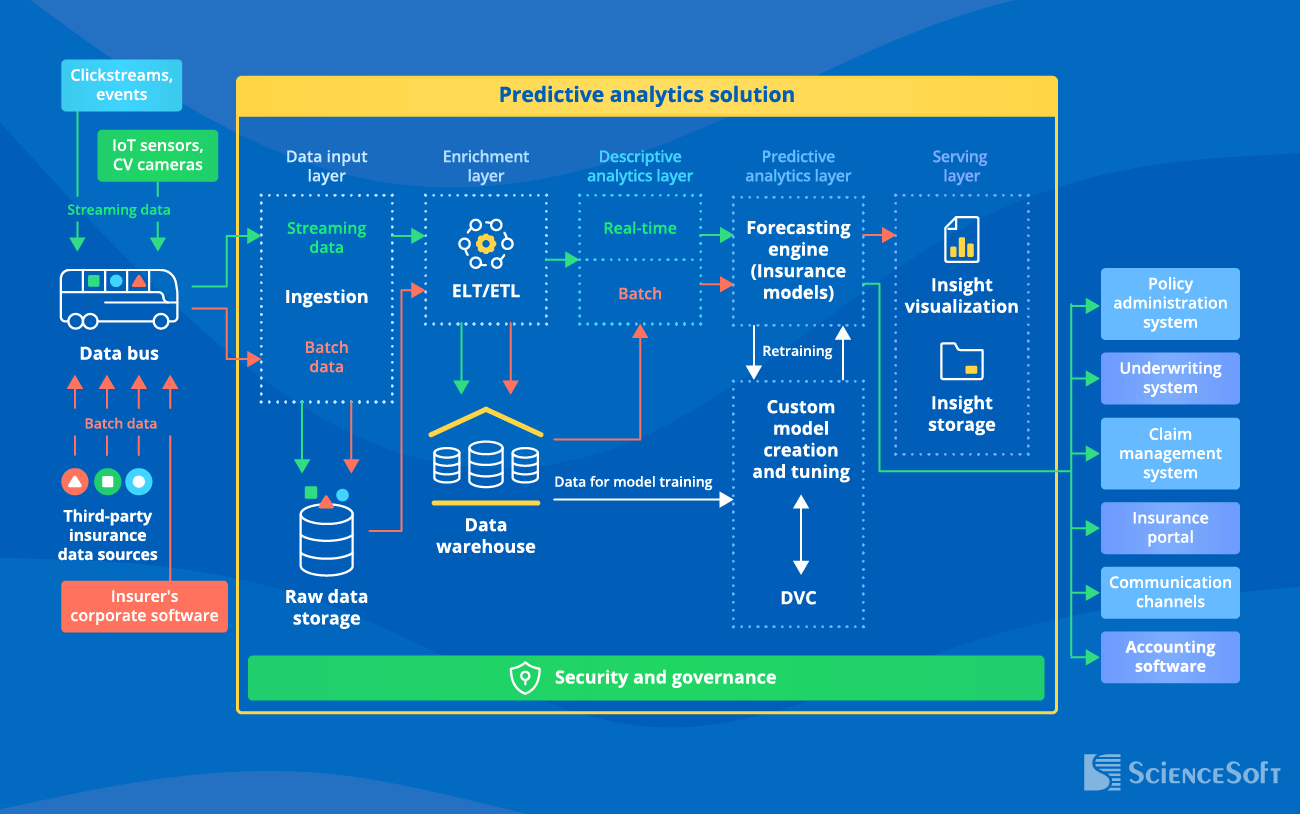

Architecture of a Predictive Analytics Solution for Insurance

ScienceSoft’s experts consider the DNN approach the most effective for solving insurance forecasting tasks. Below, we describe the reference architecture our team typically employs to create powerful predictive analytics solutions for insurance.

Such an architecture can be flexibly extended with LLM-based agents to enhance the system’s predictive capabilities, automate analytics enforcement, and instantly apply intelligent forecasts for autonomous insurance decisioning.

The essence: Real-time and batch insurance data from the available sources are processed and analyzed in two separate flows. A pre-trained DNN automatically produces highly accurate forecasts on the required insurance variables. The received predictions then get stored and visualized to be used by the insurance teams for operational and strategic planning. Real-time insights are sent directly to the relevant insurance systems to instantly trigger certain events, e.g., notifications about the insured assets’ pre-failure conditions, alerts on fraudulent transactions, dynamic premium changes, or claim payouts.
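The two-flow idea described above can be sketched in a few lines. This is only an illustrative outline under stated assumptions: the `Record` and `Pipeline` classes, the `stub_model` function standing in for a pre-trained DNN, the `harsh_braking_events` field, and the 0.8 alert threshold are all hypothetical and not part of the reference architecture itself.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class Record:
    source: str       # e.g., a telematics feed or a policy administration system
    payload: dict
    realtime: bool    # True for stream data, False for batch data


@dataclass
class Pipeline:
    model: Callable[[dict], float]          # stand-in for the pre-trained model
    alert_threshold: float = 0.8            # assumed cut-off for triggering events
    raw_store: List[Record] = field(default_factory=list)
    results_store: List[Tuple[str, float]] = field(default_factory=list)
    batch_queue: List[Record] = field(default_factory=list)
    alerts: List[str] = field(default_factory=list)

    def ingest(self, record: Record) -> None:
        """Keep the raw record, then route it to the stream or batch flow."""
        self.raw_store.append(record)
        if record.realtime:
            self._score(record, notify=True)
        else:
            self.batch_queue.append(record)

    def run_batch(self) -> None:
        """Score everything queued for batch analysis, e.g., on a schedule."""
        pending, self.batch_queue = self.batch_queue, []
        for record in pending:
            self._score(record, notify=False)

    def _score(self, record: Record, notify: bool) -> None:
        score = self.model(record.payload)
        self.results_store.append((record.source, score))
        # Only the real-time flow pushes events to downstream systems.
        if notify and score >= self.alert_threshold:
            self.alerts.append(f"ALERT from {record.source}: score={score:.2f}")


def stub_model(payload: dict) -> float:
    """Placeholder scoring rule; a real system would call the trained DNN."""
    return min(1.0, payload.get("harsh_braking_events", 0) / 10)


pipeline = Pipeline(model=stub_model)
pipeline.ingest(Record("telematics", {"harsh_braking_events": 9}, realtime=True))
pipeline.ingest(Record("policy_admin", {"harsh_braking_events": 2}, realtime=False))
pipeline.run_batch()
print(pipeline.alerts)
print(pipeline.results_store)
```

Keeping raw records, queued batch work, and analytical results in separate stores mirrors the separation of flows and storage that the architecture relies on.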

With the proposed architecture, you get:

- Fast development due to the ability to build various layers simultaneously.

- Optimized fees for the cloud (PaaS and IaaS) services due to the separate analysis of batch and stream insurance data.

- Enhanced safety of insurance data and its easy recovery in case of system issues, thanks to dedicated storage for raw data, enriched data, and analytical results.

- Flexibility to independently upgrade and scale each layer when needed.

- Minimal human participation in model management due to continuous model self-training and no need for manual cleansing and structuring of input data.

Strong data governance and security are critical for safe and uninterrupted insurance data processing. At ScienceSoft, we help our clients build scalable, analytics-driven data management frameworks that make validation and correction seamless. On top of that, we implement infrastructure security tools like SIEM, DLP, firewalls, IDS/IPS, and DDoS protection to safeguard sensitive data and the broader IT environment.

A Tech Stack to Implement Predictive Analytics for Insurance

ScienceSoft's teams typically rely on the following technologies and tools to design and launch predictive analytics solutions:

Data lake

Machine learning frameworks and libraries

Frameworks

Libraries

Machine learning platforms and services

Data governance

Security mechanisms we work with

- Data protection: DLP (data loss prevention), data discovery and classification, data backup and recovery, data encryption.

- Endpoint protection: antivirus/antimalware, EDR (endpoint detection and response), EPP (endpoint protection platform).

- Access control: IAM (identity and access management), password management, multi-factor authentication.

- Application security: WAF (web application firewall), SAST, DAST, IAST (security testing).

- Network security: DDoS protection, IDS/IPS, SIEM, XDR, SOAR, email filtering, SWG/web filtering, VPN, network vulnerability scanning.

In predictive analytics projects, the trade-off is clear: the less insurer involvement an analytics solution needs in day-to-day operation, the more manual effort is required at the development stage. Building software that handles unique analytical operations and provides stable integrations is impossible without custom coding. Plus, implementing tailored ML-based analytics involves manual design, training, and fine-tuning of analytical models.

Selected Success Stories From the Industry

Predictive Analytics to Innovate Transportation Insurance

Use case: Protective Insurance, a large US-based transportation insurer with over 90 years of expertise in the field, implemented predictive analytics to prevent claim cases. The Azure-based predictive analytics system enables real-time collection and processing of telematics data from the insured trucks. It relies on ML-powered analytical models to accurately predict potentially dangerous driving conditions and instantly notify drivers of the proper safety measures. Plus, with the powerful business intelligence capabilities, claim professionals get the previously unwieldy data sets elegantly displayed, which makes the process of reviewing potential claims more efficient.

Key techs: Azure Synapse Analytics, Azure Databricks (AI), Azure Data Factory, Azure Data Lake Storage, Power BI

Next-Gen Predictive Analytics for Commercial Insurance

Use case: Insurity, a leading US insurance analytics provider since 1985, launched a secure predictive analytics platform to help its clients from the commercial insurance domain improve their risk management, pricing, and policyholder servicing operations. The solution instantly analyzes customers’ data and produces data-driven forecasts on the probability of loss. The obtained insights help promptly set optimal risk-based insurance prices and make accurate underwriting decisions. Insurers also receive ML-based predictions on insurance portfolio health metrics, which help them promptly take the appropriate risk mitigation steps and improve portfolio profitability.

Key techs: Snowflake, Amazon EBS, Amazon ECR, Amazon ECS, Amazon EC2, Amazon S3, Amazon GuardDuty, AWS CloudTrail, Amazon Machine Images, Amazon RDS for PostgreSQL, Amazon CloudWatch, AppDynamics, Rapid7, Splunk.

ScienceSoft: We’ve Been Here Since the Dawn of AI Technology

- Since 2012 in insurance software development.

- Since 1989 in data analytics and artificial intelligence.

- Since 2005 in business intelligence and data warehousing.

- Since 2013 in big data.

Our awards, certifications, and partnerships

How ScienceSoft Can Help On Your Predictive Analytics Journey

ScienceSoft designs and builds robust predictive analytics solutions that help insurers derive value from the ever-growing volumes of data coming from disparate sources, including corporate apps, third-party systems, asset tracking solutions, and regulatory and risk databases.

Our Clients Say

Partnering with ScienceSoft for our software maintenance and evolution initiative has been an excellent experience. They identified and fixed several longstanding issues that had been causing us persistent difficulties. Special mention goes to the redesign of our reporting module, which increased reporting speed by 30 times.

Ted Frost, Managing Director at Frost Insurances

It's High Time to Use Predictive Analytics Solutions for Insurance

|

|

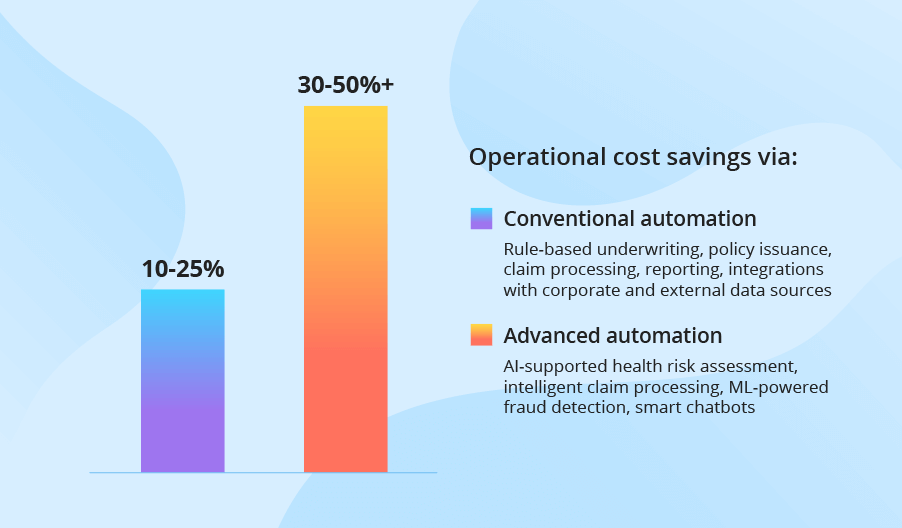

They improve business efficiencyWith predictive analytics, insurers can achieve up to 10% improvement in loss ratio, 5% decrease in claims costs, and 3x revenue growth, compared to the industry average. According to a recent ROI study, 25 insurers that employed predictive analytics realized around $400 million of incremental profit over five years. |

|

|

They speed up the insurance processesWith the help of predictive analytics, the underwriting cycle can be accelerated by 25–40% and the time for insurance claim settlement can be reduced to just 3 seconds. The ability to provide fast and accurate insurance services leads to the increased customer satisfaction and retention. |

|

|

They drive innovationsBy employing predictive analytics solutions to capture and analyze IoT big data, insurers can leverage new and more effective business models, such as usage-based car insurance, parametric insurance, pay-as-you-live life and health insurance, and more. |

|

|

Predictive analytics market witnesses continuous growthIt is projected to increase from $22.22 billion in 2025 to $91.92 billion by 2032 at a CAGR of 22.5%. |
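The projection above can be sanity-checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The short sketch below applies it to the quoted figures; the function name is just an illustrative choice.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over `years` periods."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted above: $22.22 billion in 2025, $91.92 billion by 2032.
implied_rate = cagr(22.22, 91.92, 2032 - 2025)
print(f"Implied CAGR: {implied_rate:.1%}")
```

Over the seven-year span, the implied rate comes out at roughly 22.5%, consistent with the stated CAGR.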