Stanford University suggests using CNNs for automated skin cancer diagnostics

According to the American Academy of Dermatology (AAD), skin cancer is the most common human malignancy. AAD also estimates that 212,200 new cases of melanoma (107,240 noninvasive and 104,960 invasive) will be diagnosed in the US in 2025.

Skin cancer diagnosis usually begins visually, with an initial clinical screening. This may be followed by dermoscopic analysis, a biopsy, and histopathological examination.

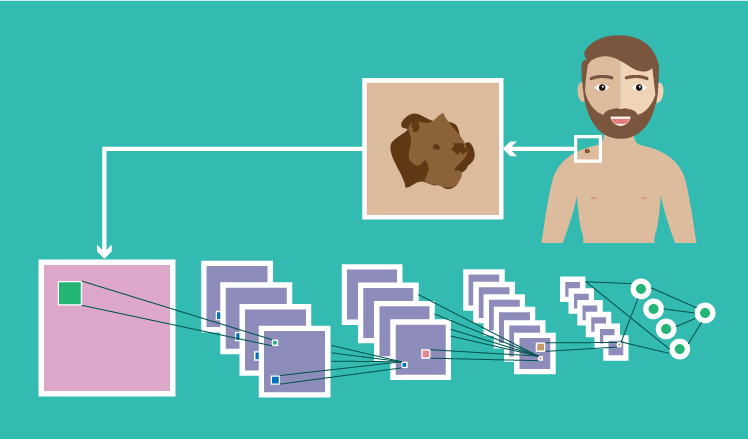

To assist healthcare providers in clinical screening, Stanford University researchers published a medical image analysis study in the journal Nature. The study shows that the classification of skin lesions can be automated using deep convolutional neural networks (CNNs).

The study in brief

The researchers trained a single CNN end-to-end on a large image dataset, using only pixels and disease labels as inputs. The dataset of 129,450 clinical images covered 2,032 diseases, organized by their visual and clinical similarity.

The CNN takes an image and outputs the probability (%) of malignancy. To ensure accurate classification, the researchers defined 757 clinical classes. For example, malignant classes included:

- Amelanotic melanoma

- Lentigo melanoma

- Acral-lentiginous melanoma, etc.

Benign classes included:

- Blue nevus

- Halo nevus

- Mongolian spot, etc.

After processing a skin lesion image and scoring it against each clinical class, the CNN produced an output such as 92% malignant melanocytic lesion and 8% benign melanocytic lesion.
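One plausible way to get from per-class scores to a coarse result like "92% malignant" is to sum the probabilities of the fine-grained classes that belong to each coarse group. The sketch below assumes exactly that. The `FINE_TO_COARSE` mapping, the `aggregate` function, and the probability values are hypothetical and chosen only for illustration; the class names are the ones listed above.

```python
from collections import defaultdict

# Hypothetical grouping of fine-grained clinical classes into coarse classes.
# Class names come from the article; the mapping itself is illustrative.
FINE_TO_COARSE = {
    "amelanotic melanoma": "malignant melanocytic lesion",
    "lentigo melanoma": "malignant melanocytic lesion",
    "acral-lentiginous melanoma": "malignant melanocytic lesion",
    "blue nevus": "benign melanocytic lesion",
    "halo nevus": "benign melanocytic lesion",
    "mongolian spot": "benign melanocytic lesion",
}


def aggregate(fine_probs):
    """Sum fine-grained class probabilities into their coarse classes."""
    coarse = defaultdict(float)
    for class_name, prob in fine_probs.items():
        coarse[FINE_TO_COARSE[class_name]] += prob
    return dict(coarse)


# Made-up per-class probabilities for a single image (they sum to 1.0).
example_probs = {
    "amelanotic melanoma": 0.50,
    "lentigo melanoma": 0.30,
    "acral-lentiginous melanoma": 0.12,
    "blue nevus": 0.05,
    "halo nevus": 0.02,
    "mongolian spot": 0.01,
}

for coarse_class, prob in aggregate(example_probs).items():
    print(f"{coarse_class}: {prob:.0%}")
```

With these illustrative numbers, the three malignant classes add up to roughly 0.92 and the three benign ones to roughly 0.08, matching the shape of the output described above.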

As other applications of CNNs in medical image analysis show, software powered by neural networks usually achieves high accuracy, specificity, and sensitivity in lesion classification. This case was no exception.
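For readers less familiar with these three metrics, here is a minimal sketch of how they are commonly computed for a binary malignant-versus-benign task. The function name `binary_metrics` and the label lists are made up for illustration and are not data from the study. The sketch assumes both classes appear in the ground truth, so no denominator is zero.

```python
def binary_metrics(y_true, y_pred):
    """Compute sensitivity, specificity, and accuracy.

    Labels: 1 = malignant (positive), 0 = benign (negative).
    Assumes y_true contains at least one positive and one negative case.
    """
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # malignant, flagged
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # malignant, missed
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # benign, cleared
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # benign, flagged
    return {
        "sensitivity": tp / (tp + fn),  # share of malignant cases caught
        "specificity": tn / (tn + fp),  # share of benign cases cleared
        "accuracy": (tp + tn) / len(pairs),
    }


# Illustrative labels only: 4 malignant and 6 benign lesions.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 1, 0]

metrics = binary_metrics(y_true, y_pred)
print(metrics)
```

In a screening context, sensitivity matters because a missed malignant lesion is costly, while specificity limits unnecessary biopsies. That trade-off is why the two are usually reported together rather than folded into accuracy alone.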

Results

The CNN’s performance was tested against 21 board-certified dermatologists on biopsy-proven clinical images in two use cases: keratinocyte carcinomas versus benign seborrheic keratoses, and malignant melanomas versus benign nevi. The CNN performed on par with all the participating experts across both tasks, showing that artificial intelligence can classify skin cancer as competently as dermatologists.

A possible adoption scenario

Healthcare providers need to be wherever their patients are, which sometimes means working outside the clinic. The Stanford researchers suggest that bringing CNN-based software for skin lesion classification to mobile devices could help caregivers provide patients with low-cost universal access to vital diagnostic care.

The takeaway

This is one more example of a successful CNN application in automated cancer diagnostics. It is also an important reminder that medical image analysis is a growing trend that will, hopefully, gradually become a healthcare industry standard. While the use of neural networks for diagnostic support is currently advancing through research and trials, the technology can also be adapted for clinical use, where it can bring extraordinary value by enabling timely diagnosis and treatment.