Trick or Treat: Using CNNs in medical image analysis for automated diagnostics

Convolutional neural networks (CNNs), a class of deep learning algorithms, are widely used in pattern and image recognition. Their robustness to noise, ability to work with large input volumes and automatic feature extraction make CNNs a promising candidate for medical image analysis. Yet neural networks are still mostly designed by trial and error. Accordingly, researchers, innovators and providers might hesitate to adopt CNNs for diagnosing, treating and monitoring patients.

To address this uncertainty, we've reviewed the tried-and-true application areas of convolutional neural networks in medical image analysis.

Diagnostics of diabetic retinopathy

Patients with diabetes risk developing multiple severe complications as the condition progresses, one of them being diabetic retinopathy (DR). Retinopathy leads to vision loss and blindness if not detected at an early stage. Regular eye examinations are the cornerstone of DR prevention and early detection.

Though OCT and wide-field imaging modalities are considered to offer better DR screening performance, most current programs employ 1- or 2-field color fundus imaging because it is cost-effective.

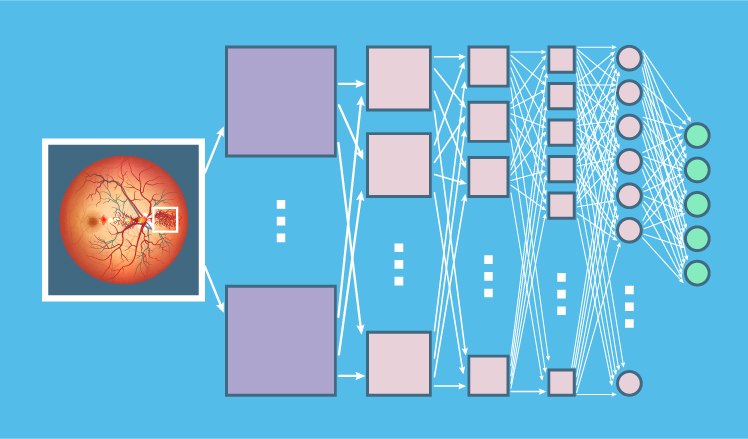

With this in mind, researchers proposed using a neural network with CNN architecture and data augmentation for diagnosing retinopathy and classifying its severity based on fundus images. Such a network should be able to identify microaneurysms, exudates and hemorrhages on the retina and automatically produce a diagnosis.

CNN training & testing

The dataset used for CNN testing contained over 80,000 images from patients of various ethnicities and ages, with extremely different levels of lighting in the fundus photographs. These differences affected pixel intensity values within the images and could hinder correct classification. Therefore, researchers applied color normalization to the fundus images.

The CNN with multiple convolutional layers was first pre-trained on 10,290 images to reach a reasonable classification level relatively quickly, without spending substantial training time. About 350 hours of training later, the model's accuracy exceeded 60%. Then the CNN was trained on the full dataset, and its accuracy reached over 70%. The researchers also composed a validation set of 5,000 images. The CNN processed this set in 188 seconds.
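The article doesn't specify which color normalization the researchers used. As a minimal sketch of the general idea, assuming simple per-channel standardization (the function name `normalize_color` and the synthetic images are hypothetical), two photos of the same scene under different lighting can be brought to the same value range:

```python
import numpy as np

def normalize_color(image: np.ndarray, eps: float = 1e-8) -> np.ndarray:
    """Standardize each color channel to zero mean and unit variance.

    This is one common normalization choice, assumed here for illustration;
    it is not necessarily the method used in the study.
    """
    pixels = image.astype(np.float64)
    mean = pixels.mean(axis=(0, 1), keepdims=True)  # per-channel mean
    std = pixels.std(axis=(0, 1), keepdims=True)    # per-channel spread
    return (pixels - mean) / (std + eps)

# Two synthetic "fundus photos" of the same scene under different lighting.
rng = np.random.default_rng(0)
scene = rng.uniform(0.2, 0.8, size=(64, 64, 3))
dim = scene * 0.5            # darker exposure
bright = scene * 1.2 + 0.1   # brighter exposure with an offset

same_after_normalization = np.allclose(
    normalize_color(dim), normalize_color(bright), atol=1e-6
)
print(same_after_normalization)
```

Standardization removes global brightness and contrast differences only; uneven illumination within a single photograph would need a local method.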

Results

The researchers defined specificity as the share of patients correctly identified as not having retinopathy and sensitivity as the share of patients correctly identified as having retinopathy. Accuracy, in their case, was the share of patients classified correctly. The classification included 5 levels:

- No retinopathy

- Mild DR

- Moderate DR

- Severe DR

- Proliferative DR

The final trained CNN achieved 95% specificity, 30% sensitivity and 75% accuracy on 5,000 validation images. The researchers note that the high specificity was achieved at the expense of sensitivity.
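The trade-off between specificity and sensitivity can be illustrated with a toy binary classifier. The scores, labels and thresholds below are made up for illustration and have nothing to do with the study's data; the sketch only shows that raising the decision threshold tends to increase specificity while lowering sensitivity.

```python
def sensitivity_specificity(scores, labels, threshold):
    """Compute (sensitivity, specificity) for a score threshold.

    A case is predicted positive when its score is >= threshold.
    """
    pairs = list(zip(scores, labels))
    tp = sum(1 for s, y in pairs if y == 1 and s >= threshold)
    fn = sum(1 for s, y in pairs if y == 1 and s < threshold)
    tn = sum(1 for s, y in pairs if y == 0 and s < threshold)
    fp = sum(1 for s, y in pairs if y == 0 and s >= threshold)
    return tp / (tp + fn), tn / (tn + fp)

# Hypothetical model scores and ground-truth labels (1 = disease present).
scores = [0.1, 0.2, 0.3, 0.4, 0.45, 0.55, 0.6, 0.7, 0.8, 0.9]
labels = [0, 0, 0, 1, 0, 1, 0, 1, 1, 1]

lenient = sensitivity_specificity(scores, labels, threshold=0.35)
strict = sensitivity_specificity(scores, labels, threshold=0.65)
print("lenient threshold:", lenient)
print("strict threshold:", strict)
```

With the lenient threshold every diseased case is caught but some healthy cases are flagged; with the strict threshold no healthy case is flagged but some diseased cases are missed.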

Cancer diagnostics

Enabling early cancer diagnostics is a critically important task for healthcare, as survival rates depend heavily on how early a tumor is detected. CNNs are widely used to localize various tumors, such as brain gliomas, lung adenocarcinomas, ductal carcinoma in situ (DCIS) of the breast and more. In this overview, we concentrate on breast tumors and pulmonary nodules (malignant and benign).

Detecting breast tumors

According to the CDC, breast cancer is the second leading cause of cancer deaths among women. Moreover, the American Cancer Society (ACS) reports that of the 12.1 million mammograms performed annually, 50% yield false positives, resulting in wrong diagnoses and about 20% unnecessary biopsies.

CNN training & testing

To address false positives, unnecessary tests and high mortality, much effort goes into improving breast cancer diagnostics and treatment. As mammography is the primary procedure for detecting lesions, medical researchers propose various methods of analyzing mammograms automatically to support health specialists in their decisions.

One such method was implemented in 3 steps:

- Image augmentation (cropping, rotation and resizing of the original mammogram)

- Feature extraction via a trained CNN

- Training of a support vector machine (SVM) on the extracted features to perform the classification task (distinguishing patients without a tumor from those with one, and determining whether a tumor is benign or malignant)

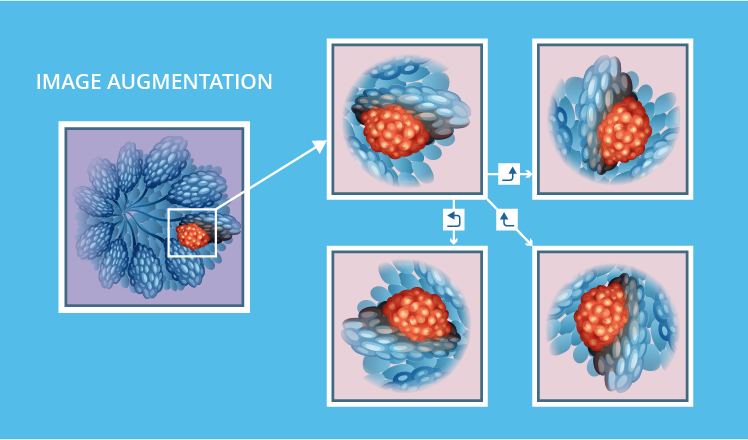

Image augmentation

This step was necessary to properly train a CNN. The initial mammographic dataset at the researchers' disposal included 322 images, 51 of which corresponded to malignant tumors. First, the researchers wanted to get rid of excessive black zones at the borders that could hinder classification. They created an algorithm to crop images automatically and thus increase analytical accuracy. Moreover, every mammogram was rotated by -90°, 90° and 180°. This expanded the dataset to 600 images.
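The cropping algorithm itself isn't described in the article. A minimal sketch of the two augmentation ideas, assuming a grayscale image stored as a NumPy array and a simple intensity threshold for "black" (both `crop_black_borders` and `rotations` are hypothetical helper names), could look like this:

```python
import numpy as np

def crop_black_borders(image: np.ndarray, threshold: int = 0) -> np.ndarray:
    """Trim rows and columns at the edges that contain only dark pixels.

    Assumes a 2D grayscale image; the threshold is an illustrative choice.
    """
    mask = image > threshold
    rows = np.flatnonzero(mask.any(axis=1))
    cols = np.flatnonzero(mask.any(axis=0))
    if rows.size == 0:  # fully black image: nothing to keep
        return image
    return image[rows[0]:rows[-1] + 1, cols[0]:cols[-1] + 1]

def rotations(image: np.ndarray) -> list:
    """Return the original image plus its 90°, 180° and -90° rotations.

    np.rot90 rotates counterclockwise; k=3 is equivalent to -90°.
    """
    return [np.rot90(image, k) for k in (0, 1, 2, 3)]

# Synthetic "mammogram": a bright 4x5 region surrounded by black borders.
mammogram = np.zeros((8, 10), dtype=np.uint8)
mammogram[2:6, 3:8] = 200

cropped = crop_black_borders(mammogram)
augmented = rotations(cropped)
print(cropped.shape, [a.shape for a in augmented])
```

Real mammograms rarely have perfectly black borders, so a practical version would likely need a non-zero threshold or some noise handling.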

Feature extraction

At the next step, the CNN with multiple convolutional layers was pre-trained with the expanded dataset. Activations of the last convolutional layer were selected as the source of main features for further classification. In total, 4096 features were extracted for each image.

Classification

To train the system for classification and test its accuracy, researchers divided the dataset into 2 groups. The training set was composed of 360 mammograms, while the testing set included 240 images.

Then, each of the 360 training images was given as the input for the CNN, and the features obtained at this step became the inputs for the support vector machine. Next, the classification accuracy was evaluated via the test set of 240 mammograms, with the same process of feature extraction for every image.

Results

The peak mammogram analysis accuracy achieved was 64.52%. Researchers note that to increase the accuracy, the following alterations are needed:

- Extracting features from multiple CNN layers, instead of sourcing only from the last one

- Including a feature selection phase to preselect the most fitting features extracted by the CNN for better classification

- Testing other classifier approaches, such as fuzzy inference systems or clustering techniques

Higher accuracy might also be achieved with larger datasets and additional augmentation methods, such as image quality improvement.

Detecting pulmonary nodules

The ACS states that lung cancer is the leading cause of cancer deaths among men and women, accounting for about 1 in 4 cancer deaths. One of the effective procedures for reducing lung cancer mortality is regular CT screening (according to the National Lung Screening Trial). It makes it possible to detect pulmonary nodules and then monitor their growth, as small nodules are mostly benign. In the case of malignancy, early detection improves the chances of survival.

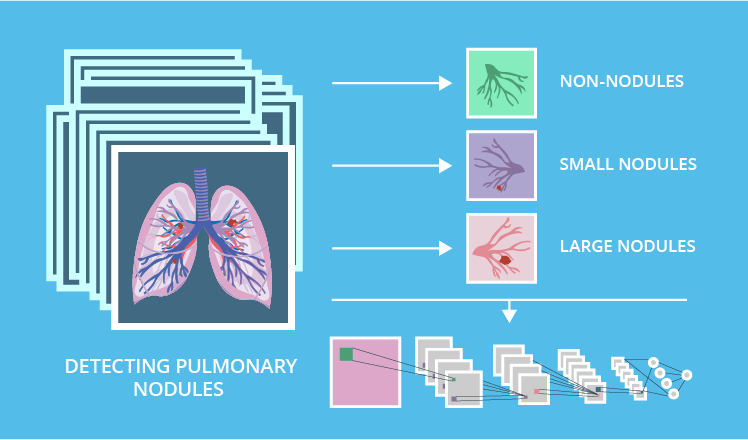

However, CT scan interpretation is tedious and time-consuming work, and human readers are not infallible. To reduce the chance of false negatives, researchers came up with the concept of a system for computer-aided detection (CADe) of lung cancer, based on CNNs. Within the CADe system, the team of specialists outlined 3 separate modules: localization of regions of interest (ROIs), classification of nodules and classification of malignancy.

The overview below presents a model for classification of nodules via CNNs.

CNN training & testing

The initial image dataset included 1,000+ CT scans, each annotated by 4 radiologists. Based on their input, 3 object types were identified in the images: non-nodules, small nodules (less than 3 mm in diameter) and large nodules (3 mm and more).

To enable higher CNN training accuracy, the researchers filtered the dataset based on the annotations: nodules were included in the set only if they were identified unanimously by all of the radiologists. The resulting dataset totaled 368 small nodules and 564 large ones.

The whole batch of 932 nodules was split into training and test datasets (80% and 20%, respectively). However, CNNs typically perform better with larger datasets, so the researchers massively enlarged the training set. They extracted a 36x36x36 voxel cube from 4 corresponding CT scans for each nodule. Then, 48 unique perspectives of each nodule were extracted from every voxel cube.

This technique increased the number of training items from 746 to 35,808. The researchers then randomly cropped the 36x36 image slices (for each slice in a sheet) into 34x34 slices, which increased the dataset size to 465,504 items.
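The dataset sizes quoted above can be checked with a few lines of arithmetic. The sketch below only reproduces the reported numbers; the multiplier of 13 for the cropping step is inferred from the reported totals and is an assumption, since the article doesn't say how many crops were taken per item.

```python
# Nodules kept after the unanimity filter (from the article).
total_nodules = 368 + 564                      # small + large
train_nodules = round(total_nodules * 0.8)     # 80% split: 745.6 rounds to 746

# 48 perspectives per nodule (from the article).
views_per_nodule = 48
after_views = train_nodules * views_per_nodule

# Reported final size after random cropping (from the article).
final_items = 465_504
implied_crop_factor = final_items / after_views  # inferred, not stated in the source

# For reference: distinct positions of a 34x34 window inside a 36x36 slice.
possible_crop_offsets = (36 - 34 + 1) ** 2

print(total_nodules, train_nodules, after_views)
print(implied_crop_factor, possible_crop_offsets)
```

The implied factor comes out as a whole number, which suggests a fixed number of variants per item, though it doesn't match the 9 possible crop positions, so the exact counting scheme remains unclear from the article.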

Results

This model aimed to classify an object within the region of interest accurately, determining whether it is a large nodule or one of the benign objects.

The researchers tested 4 variations of the CNN, with 3, 4, 5 and 6 convolution layers. For the classification task, all the alternatives achieved accuracy higher than 81%. Peak accuracy differed across the variations by only 1.02%.

The CNN with 5 convolution layers was recognized as the best performing architecture, with 82.1% accuracy, 78.2% sensitivity and 86.1% specificity.

Diagnostics of multiple sclerosis

In multiple sclerosis (MS), each patient's struggle is unique. Depending on what part of the central nervous system is affected, MS can cause coordination, movement, vision, sensation as well as bladder and bowel control problems. The variety of symptoms not only lowers quality of life but also hinders diagnostics, and only an MRI scan is able to determine whether a patient is suffering from multiple sclerosis or not.

MRI is considered the most effective method for diagnosing MS and monitoring its progress due to its sensitivity to the central lesions, which are especially visible on the T2 MRI sequence.

To improve MS diagnostics, researchers proposed an approach that harnesses CNNs and multilayer perceptron neural networks (MLPs). This approach includes processing MRI images to detect MS-caused lesions.

CNN training & testing

The dataset used by the researchers consisted of 153 MRI images: 72 of patients without MS lesions, and 81 images with MS or suggestive MS lesions. To allow for higher analytical accuracy, all images were pre-processed. The researchers removed artifacts (gradients, overlapping intensities, noise, etc.) and surrounding tissue, and resized the slices.

After the pre-processing stage, the CNN with 4 convolution layers was trained on the dataset and extracted image features, in this case, objects of potential sclerosis. These features were fed into the MLP classifier so that it would indicate whether the MRI scan is normal or contains an MS lesion. The CNN extracted 120 features, which were grouped into 2 categories:

- Normal

- MS or suggestive MS

It is noteworthy that in this study the CNN didn't use any segmentation.

Results

The researchers defined 4 measures to evaluate the model's performance:

- True positive (TP): the classification result is positive and there is a lesion

- True negative (TN): the classification result is negative and there's no lesion

- False positive (FP): the classification result is positive and there's no lesion

- False negative (FN): the classification result is negative and there is a lesion

Within these measures:

- Sensitivity was calculated as TP / (TP+FN)

- Specificity was calculated as TN / (TN+FP)

- Accuracy was calculated as (TP+TN) / (TP+TN+FP+FN)
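The three formulas above translate directly into code. In the sketch below, FP counts positive results where there is no lesion and FN counts negative results where there is a lesion. The counts passed to the function are hypothetical and are not taken from the study.

```python
def diagnostic_metrics(tp: int, tn: int, fp: int, fn: int):
    """Return (sensitivity, specificity, accuracy) from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)                 # share of lesions that were found
    specificity = tn / (tn + fp)                 # share of normal scans left unflagged
    accuracy = (tp + tn) / (tp + tn + fp + fn)   # share of all scans classified correctly
    return sensitivity, specificity, accuracy

# Hypothetical counts for 100 scans: 50 with a lesion, 50 without.
sens, spec, acc = diagnostic_metrics(tp=45, tn=40, fp=10, fn=5)
print(f"sensitivity={sens:.2f} specificity={spec:.2f} accuracy={acc:.2f}")
```

Because accuracy is a weighted average of sensitivity and specificity, it always lies between the two.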

Accordingly, the combination of CNN and MLP resulted in 97.6% specificity, 96.1% sensitivity and 92.9% accuracy.

Diagnostics of infectious diseases

Detecting pathogens of malaria in thick blood smears and tuberculosis in sputum samples

Malaria is a mosquito-borne disease caused by parasites of the genus Plasmodium, which can be detected via microscopic examination of stained blood smear samples. The diagnosis can be confirmed based on the size and shape of various parasite stages and the presence of stippling (i.e. bright red dots).

Pulmonary tuberculosis (TB) is one of the deadliest infectious diseases worldwide. Smear microscopy of sputum was chosen to diagnose patients in this research. For a TB-positive result, the sputum sample has to show the presence of mycobacteria, which appear as red or pink rods (bacilli) against a blue background.

CNN training & testing

Prior to training CNNs for automated malaria / TB analysis, lab technicians manually annotated the objects of interest in every image: 7,245 objects in 1,182 images for malaria and 3,734 objects in 928 images for tuberculosis.

Then, each collected image was downsampled and split into overlapping patches. The 'positive' patches, containing the Plasmodium parasite or bacilli, were centered on the bounding boxes in the annotation. The 'negative' ones, without any of the pathogens though possibly containing staining artifacts, blood cells or impurities, were taken from random locations in each image that did not intersect with any annotated bounding box.

The trained CNN with 2 convolution layers could classify each patch as either containing an object of interest or not. The networks were applied to the respective test sets: 261,345 test patches (11.3% positive) for Plasmodium detection, and 315,142 test patches (9.0% positive) for tuberculosis mycobacteria.
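The patch sampling scheme can be sketched as follows. This is an illustrative reconstruction under stated assumptions, not the study's code: boxes are `(x, y, width, height)` tuples, patches are square, the helper names are hypothetical, and handling of patches that extend past the image border is left out.

```python
import random

def intersects(a, b) -> bool:
    """True if two (x, y, width, height) boxes overlap."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    return ax < bx + bw and bx < ax + aw and ay < by + bh and by < ay + ah

def positive_patch(box, size):
    """Square patch of the given size centered on an annotated box."""
    x, y, w, h = box
    cx, cy = x + w // 2, y + h // 2
    return (cx - size // 2, cy - size // 2, size, size)

def negative_patches(image_w, image_h, boxes, size, count, rng, max_tries=1000):
    """Random patches that do not intersect any annotated box."""
    patches, tries = [], 0
    while len(patches) < count and tries < max_tries:
        tries += 1
        candidate = (
            rng.randrange(image_w - size + 1),
            rng.randrange(image_h - size + 1),
            size,
            size,
        )
        if not any(intersects(candidate, box) for box in boxes):
            patches.append(candidate)
    return patches

# Hypothetical 100x100 image with one annotated parasite.
annotations = [(40, 40, 10, 10)]
pos = [positive_patch(box, size=20) for box in annotations]
neg = negative_patches(100, 100, annotations, size=20, count=5, rng=random.Random(0))
print(pos, neg)
```

Rejection sampling keeps the negatives uniformly distributed over the pathogen-free area; `max_tries` guards against an endless loop when annotations cover most of the image.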

Results

As a result, the CNNs performed the malaria detection task with higher accuracy than the TB one, likely because a larger training set was processed. The researchers didn't mention any specific numbers, yet claimed that their results are in line with another study on identifying malaria parasites in images (that study reported 78% precision while finding 90% of the parasites that technicians had found).

It is noteworthy that the algorithm's object detecting performance is close to lab technicians' performance and in some cases exceeds it. Therefore, using CNNs for detecting malaria and TB pathogens can accelerate diagnostics and ensure timely treatment.

Pitfalls in using CNNs for automated image analysis

While convolutional neural networks are well known for their high performance in classification, their capabilities aren't limitless. We've encountered a few issues that may hinder adoption of CNNs for certain clinical image analysis tasks:

- Most medical images have a poorer signal-to-noise ratio than images taken with a digital camera. This can make the contrast between anatomically distinct structures (e.g. lesion, edema, healthy tissue) too low to be computed reliably. If images aren't pre-processed to remove artifacts and reduce noise, CNNs may not reach accurate results.

- Next, it is always possible to over-train a convolutional neural network, which is a common pitfall for all neural networks. If the network is trained on the same dataset for too long, it can overfit the training images. That is why many researchers use a validation dataset to track a CNN's performance and stop training when validation performance starts decreasing (which is a sign of overfitting).

- CNNs (as well as any neural network) work in a black-box way, not really offering explanations of their conclusions. With any input, a corresponding output can be produced, but it may be hard to explicate the decision and its reliability, which is certainly problematic for medical image processing. Some researchers claim that more comprehensive and rational explanations can be provided by combining neural networks with fuzzy logic by using CNNs to process fuzzy information.
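The validation-based stopping rule mentioned in the list above is usually called early stopping. A minimal sketch, assuming validation loss is tracked per epoch and a hypothetical `patience` parameter tolerates a few non-improving epochs before training halts:

```python
def early_stopping_epoch(val_losses, patience: int = 2) -> int:
    """Return the epoch with the best validation loss seen before stopping.

    Training stops once the loss has failed to improve for `patience`
    consecutive epochs; later epochs are never evaluated.
    """
    best_epoch, best_loss = 0, float("inf")
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss = epoch, loss
        elif epoch - best_epoch >= patience:
            break  # validation loss has stopped improving
    return best_epoch

# Hypothetical validation losses: improvement until epoch 3, then degradation.
val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61, 0.40]
best = early_stopping_epoch(val_losses, patience=2)
print(best)
```

In this made-up series the loop halts at epoch 5 and returns epoch 3, so the late drop to 0.40 is never seen. That is the price of stopping early: a larger `patience` trades extra training time for a lower risk of stopping on a temporary plateau.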

Being aware of such pitfalls, researchers can harness additional algorithms to preprocess a dataset, explain diagnostic outputs and reach peak accuracy while avoiding overtraining. This way, specialists can be more successful in creating systems for automated diagnostics based on CNNs.

CNNs for diagnostics: Final word

In this overview, we collected 5 separate use cases that showcase CNNs' performance in classification and function approximation. Let us sum up the performance achieved in every case:

- The study on identifying diabetic retinopathy. The system used multiple convolutional layers and resulted in 95% specificity, 30% sensitivity and 75% accuracy.

- The study on localizing breast tumors. The algorithm reached 64.52% accuracy; the number of convolutional layers was not reported.

- The work on detecting pulmonary nodules. The researchers tested a few variations of convolutional layer quantities and the best result was 5 layers with 82.1% accuracy, 78.2% sensitivity and 86.1% specificity.

- The system for diagnosing multiple sclerosis involved 4 convolutional layers and resulted in 97.6% specificity, 96.1% sensitivity and 92.9% accuracy.

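- The research on infectious diseases. The algorithm included 2 convolutional layers; no exact figures were reported, but the results were said to be in line with a comparable study that achieved approximately 78% precision.

Accordingly, the lowest accuracy reached was 64.52%, and the highest 92.9%. Averaging the five headline figures (four accuracy values plus the 78% precision for infectious diseases) gives approximately 78.5%.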
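The 78.5% figure can be reproduced as the plain mean of the five headline numbers listed above. Note that this mixes four accuracy values with one precision value, so it is a rough summary rather than a like-for-like comparison.

```python
# Headline figures from the five studies, in percent.
headline = {
    "diabetic retinopathy (accuracy)": 75.0,
    "breast tumors (accuracy)": 64.52,
    "pulmonary nodules (accuracy)": 82.1,
    "multiple sclerosis (accuracy)": 92.9,
    "infectious diseases (precision)": 78.0,
}

mean_performance = sum(headline.values()) / len(headline)
lowest = min(headline.values())
highest = max(headline.values())

print(f"mean={mean_performance:.1f}% lowest={lowest}% highest={highest}%")
```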

Researchers do find a great potential in neural networks for addressing such problems as diagnosing infectious diseases, different forms of cancer, ophthalmologic complications of diabetes and chronic neurological disorders.

The results these studies have shown are promising, yet they still seem some way from real-life clinical application. Most of the CNNs presented accurately perform one or two tasks whose output should later serve as input for other systems, which will draw a conclusion and output the diagnosis. As we've seen, some researchers do plan to expand their studies further and create an end-to-end diagnostic system.

References:

http://www.sciencedirect.com/science/article/pii/S1877050916311929

https://arxiv.org/pdf/1608.02989.pdf

http://www1.eafit.edu.co/asr/courses/research-practises-me/2016-1/students/final-reports/c_paper_gallegoposada_montoyazapata.pdf

http://gkmc.utah.edu/winter2016/sites/default/files/webform/abstracts/WCBI2016_Paper.pdf

http://www.aensiweb.com/old/GJMPR/2012/50-54.pdf