Real-Time Data Processing

Architecture and Toolset

In data analytics since 1989, ScienceSoft helps companies across 30+ industries implement scalable and high-performing real-time data processing solutions.

Real-Time Data as a Revenue Booster

According to the 2025 Data Streaming Report by Confluent, 44% of organizations achieve a 500% return on investment from implementing real-time data streaming and analytics. The survey gathered insights from 4,175 IT leaders across key industries such as manufacturing, financial services, telecommunications, and technology.

The surveyed executives report that switching to real-time data processing contributed to:

- More successful development of new products and services.

- Improved customer experience.

- Reduced operational costs.

Types of Real-Time Processing Architectures

Lambda and Kappa architecture types are the most efficient for scalable, fault-tolerant real-time processing systems. The optimal choice between the two depends on the specifics of every use case, including the approach to coupling real-time and batch processing.

The difference between real-time and batch processing

Real-time data processing is a method that enables dynamic input of constantly changing data and near-instant operational response and/or smart analytical output (e.g., responses to user requests, automated action triggers, personalized recommendations).

Batch processing is a method of data processing that allows the system to handle non-time-sensitive data (e.g., revenue reports, billing data, customer orders) according to established intervals (every hour, every week) and enables historical data analytics.
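To make the contrast concrete, here is a minimal sketch in Python. The event data, the `LARGE_ORDER` threshold, and both function names are hypothetical and serve only as an illustration: the real-time path responds to each event as it arrives, while the batch path aggregates stored events per fixed interval.

```python
from collections import defaultdict

# Hypothetical events: (timestamp in seconds, customer id, order amount).
EVENTS = [
    (10, "alice", 120.0),
    (1800, "bob", 40.0),
    (3700, "alice", 900.0),
    (5400, "carol", 15.0),
]

LARGE_ORDER = 500.0  # illustrative threshold, not taken from the article


def process_in_real_time(event):
    """Handle a single event on arrival and return an immediate response."""
    _, customer, amount = event
    if amount >= LARGE_ORDER:
        return f"review order from {customer}"
    return f"confirm order from {customer}"


def process_in_batch(events, interval_seconds=3600):
    """Aggregate already stored events into revenue totals per interval."""
    revenue = defaultdict(float)
    for timestamp, _, amount in events:
        revenue[timestamp // interval_seconds] += amount
    return dict(revenue)


# Real-time: one response per incoming event.
realtime_responses = [process_in_real_time(e) for e in EVENTS]

# Batch: one hourly revenue report over the accumulated events.
hourly_revenue = process_in_batch(EVENTS)
```

In a production system, the real-time function would typically be triggered by a message broker or stream processor, and the batch function would run on a schedule against stored data.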


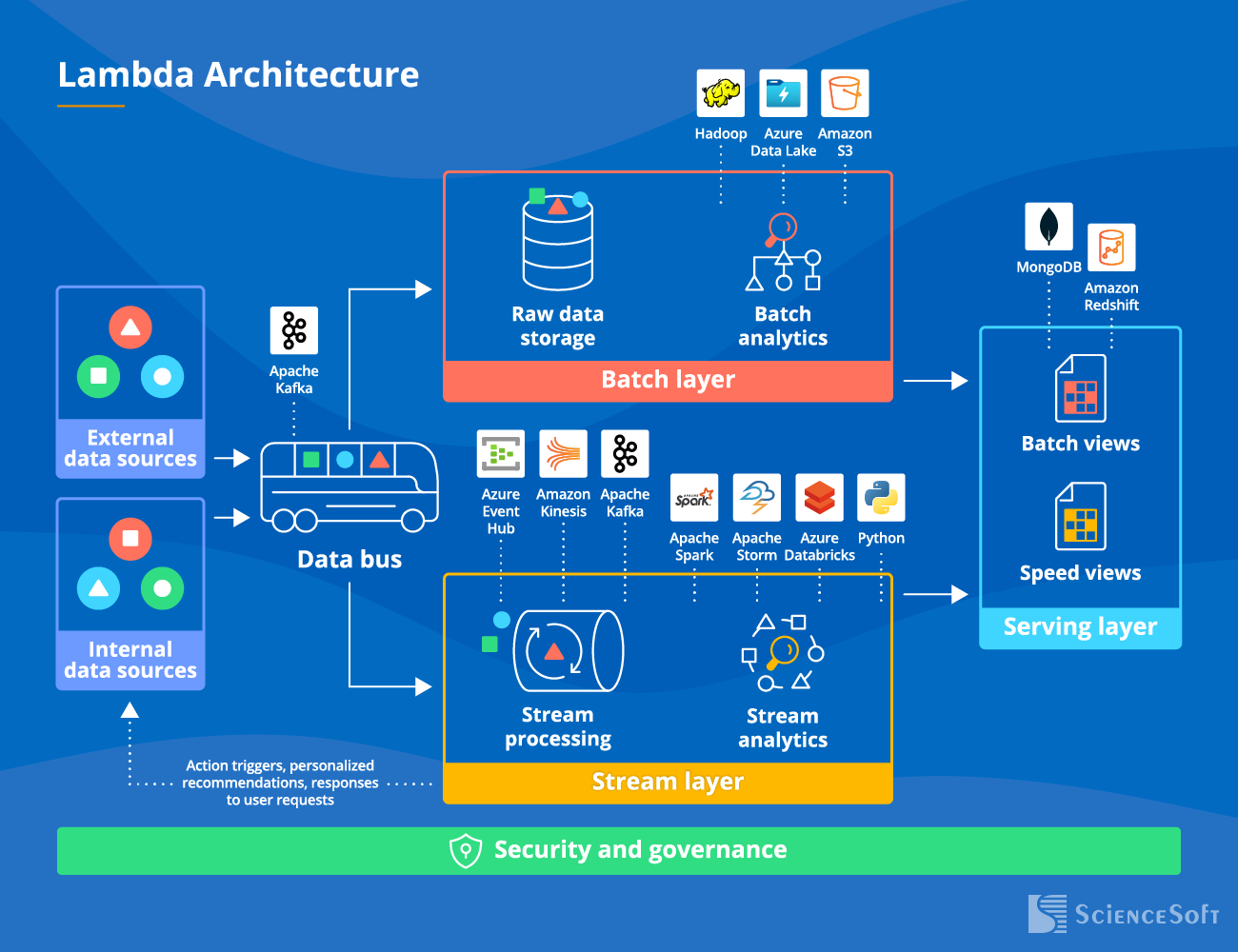

Lambda architecture

The Lambda architecture has separate real-time and batch processing pipelines: they are built upon different tech stacks and run independently. The serving layer (a distributed database or a NoSQL database) is built on top of the two layers. It combines batch and near real-time data views from the corresponding pipelines to provide real-time analytics insights for BI dashboards and enable ad hoc data exploration.
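The interplay of the three layers can be sketched in a few lines of Python. This is a simplified in-memory illustration, not a reference implementation: the class names, the `ingest` helper, and the purchase-count example are assumptions made for the sketch, and a real system would use a data lake, a stream processor, and a distributed database instead.

```python
from collections import Counter


class BatchLayer:
    """Recomputes a complete view from the full historical data set."""

    def __init__(self):
        self.master_data = []  # stands in for a data lake
        self.view = Counter()

    def append(self, event):
        self.master_data.append(event)

    def run_batch_job(self):
        # Full recomputation over all stored events.
        self.view = Counter(e["product"] for e in self.master_data)


class SpeedLayer:
    """Keeps an incremental view of events not yet covered by a batch run."""

    def __init__(self):
        self.view = Counter()

    def update(self, event):
        self.view[event["product"]] += 1

    def reset(self):
        self.view.clear()


class ServingLayer:
    """Answers queries by combining the batch and real-time views."""

    def __init__(self, batch, speed):
        self.batch = batch
        self.speed = speed

    def purchases(self, product):
        return self.batch.view[product] + self.speed.view[product]


def ingest(event, batch, speed):
    """Every incoming event goes to both pipelines."""
    batch.append(event)
    speed.update(event)


batch, speed = BatchLayer(), SpeedLayer()
serving = ServingLayer(batch, speed)

for product in ["book", "lamp", "book"]:
    ingest({"product": product}, batch, speed)
before_batch = serving.purchases("book")  # answered from the speed view only

batch.run_batch_job()
speed.reset()  # the real-time view is discarded once the batch view catches up
ingest({"product": "book"}, batch, speed)
after_batch = serving.purchases("book")  # batch view plus the newest event
```

The sketch resets the speed layer right after the batch job. In practice, coordinating this handover is one of the places where consistency between the two views takes extra effort.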

Best for: scenarios where real-time processing must be coupled with cost-efficient storage and analytics of large historical data sets (petabyte-range). For instance, in an ecommerce app, real-time analytics is crucial for immediate responses like confirming a payment or sending a personalized cross-selling recommendation. At the same time, batch analytics helps identify shopping patterns based on customer behavior data.

Lambda pros

- High fault tolerance: if the streaming layer fails, the batch layer still has the data.

- All historical data is stored in a data lake, enabling efficient batch processing and complex analytics.

- More effective training of machine learning models thanks to the access to complete historical data sets.

Lambda cons

- Comparatively longer and more expensive development due to architectural complexity.

- More challenging to test and maintain.

- Extra efforts may be needed to achieve data consistency between real-time and batch views.

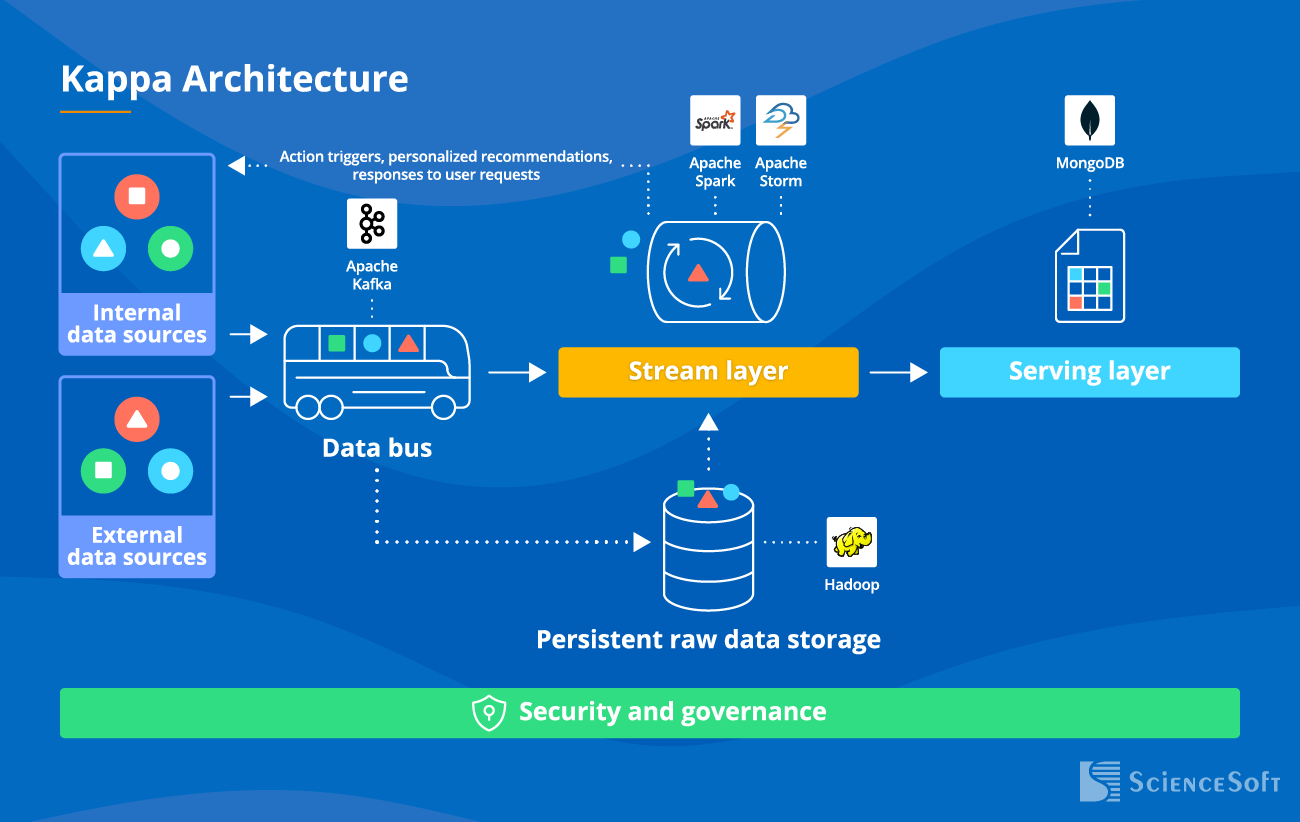

Kappa architecture

The Kappa architecture has a single streaming layer that supports both real-time and batch processing. Consequently, both processes use the same tech stack. The serving component receives a unified view of real-time and batch analytics results.
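A minimal Python sketch of this idea is shown below. It assumes an append-only event log as the single source of data and treats batch-style processing as a replay of that log through the same job code. The `EventLog` and `StreamJob` classes and the sensor readings are hypothetical names and data chosen for illustration.

```python
class EventLog:
    """Append-only log, standing in for a durable streaming platform."""

    def __init__(self):
        self._events = []

    def append(self, event):
        self._events.append(event)

    def read_from(self, offset):
        return self._events[offset:]


class StreamJob:
    """One piece of processing logic used for both live and historical data."""

    def __init__(self, log, transform):
        self.log = log
        self.transform = transform
        self.offset = 0   # position of the next unread event in the log
        self.peaks = {}   # highest transformed reading seen per sensor

    def poll(self):
        """Process all events appended since the previous poll."""
        new_events = self.log.read_from(self.offset)
        for sensor, reading in new_events:
            value = self.transform(reading)
            self.peaks[sensor] = max(self.peaks.get(sensor, value), value)
        self.offset += len(new_events)
        return len(new_events)


log = EventLog()

# Live processing: the job keeps up with the log as events arrive.
live = StreamJob(log, transform=lambda celsius: celsius)
log.append(("s1", 20.0))
log.append(("s2", 30.0))
live.poll()
log.append(("s1", 25.0))
live.poll()

# Historical reprocessing: a new job with changed logic replays the same log
# from offset 0 instead of running on a separate batch stack.
replayed = StreamJob(log, transform=lambda celsius: celsius * 9 / 5 + 32)
replayed.poll()
```

The trade-off visible here is that historical analytics depends on how much of the log is retained and how fast it can be replayed.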

Best for: scenarios where the focus is on low-latency responses, and historical analytics is complementary, e.g., for video streaming, sensor data processing, or fraud detection.

Kappa pros

- May be cheaper and faster to implement thanks to a simpler architecture where both processing types run on the same tech stack.

- Lower testing and maintenance costs.

- Easy to scale and expand with new functionality.

Kappa cons

- Lower fault tolerance compared to Lambda.

- Limited access to historical data and its comprehensive analytics, including ML training.

The Advantages of Real-Time Processing Shouldn't Come at a Price

Despite its obvious benefits, real-time data processing can also introduce new risks. In pursuit of higher processing speed, big data developers often make compromises, and data security and accuracy are usually the first to suffer. Another frequent casualty is scalability: it doesn’t matter how quickly your software churns through data if it accumulates technical debt just as fast. Managing these risks to achieve high speed without compromising quality is what really makes or breaks real-time big data projects. And there’s no universal solution: to build a future-proof system, you’ll need an architecture tailored to the business specifics and a fair share of custom code.

Why Entrust Your Real-Time Data Processing Solution to ScienceSoft?

- Since 1989 in custom software development and data analytics.

- Since 2003 in end-to-end big data services.

- Hands-on experience in 30+ industries, including healthcare, banking, lending, investment, insurance, retail, ecommerce, professional services, manufacturing, transportation and logistics, energy, telecoms, and more.

- ISO 9001- and ISO 27001-certified quality management and security management systems to guarantee top service quality and complete protection of our clients' data.


Build a Robust Real-Time Solution With Experts

With an in-house PMO and established project management practices, ScienceSoft's priority is to drive your project to its goals despite time and budget constraints and changing requirements.

Real-Time Data Processing Is Crucial to Preserve Data Value

According to Confluent's report,

May Your Real-Time Solution Drive Real Value for Your Business

And if you need a trusted partner to help you tame milliseconds, ScienceSoft is ready to shoulder the challenge.