Best Software to Build a Data Warehouse in the Cloud

Features, Benefits, Costs

Since 2005, ScienceSoft has been rendering data warehouse consulting services to help our clients build flexible centralized storages and enable data-driven decision-making.

Management Summary

| Best for | Description | |

|---|---|---|

|

|

Best for

|

Description

Azure Synapse Analytics is good for integrating data from hundreds of data sources across the company’s divisions, subsidiaries, etc. for analytical querying to be performed in seconds. Reporting on all management levels, from C-suite to directors, managers and supervisors, is protected with a fine-grained data access control. |

|

|

Best for

Big data warehousing |

Description

Amazon Redshift enables SQL-querying of exabytes of structured, semi-structured, and unstructured data across the data warehouse, operational data stores, and a data lake with the possibility to further aggregate data with big data analytics and ML services. |

|

|

Best for

Cost-effective storage of big volume of data with infrequent queries |

Description

BigQuery allows for cost-effective exabyte-scale storage with tables having up to 10,000 columns. It's most effective when main analytical queries either filter data according to partitioning or clustering or require scanning the entire dataset. |

|

|

Best for

Midsize data warehouse |

Description

Azure SQL database is a good fit for data warehousing scenarios with up to 8 TB of data volumes and a large number of active users (concurrent requests can reach up to 6,400 with up to 30,000 concurrent sessions). |

|

|

Best for

Cloud-agnostic data warehouse |

Description

Provided as Software-as-a-Service, Snowflake enables companies to concurrently allocate compute resources of different cloud vendors (AWS, Azure, GCP) to the same database for loading and querying data with no impact on the data warehouse performance. |

|

|

Best for

Operational data warehouse (hybrid transaction/analytical processing) |

Description

Azure Cosmos DB and Azure Synapse Analytics enable enterprise teams to run fast, cost-effective no-ETL queries on large operational real-time data sets, without copying data and impacting the performance of the company’s transactional workloads. |

Cloud Data Warehouse: The Essence

A cloud data warehouse is a type of data warehouse that uses the space and compute power allocated by a cloud provider to integrate and store data from disparate data sources for analytical querying and reporting.

Cloud vs. On-premises data warehouse

|

|

On-premises data warehouses |

Cloud data warehouses |

|---|---|---|

|

Scalability

|

Scalability depends on the in-house infrastructure and may be time- and resource-consuming due to reconfiguring/purchasing hardware, software, etc. |

Instant up- or down-scaling. |

|

Availability

|

Depends on the quality of available hardware, software and the competence of an in-house IT team. |

Up to 99.99% of uptime with leading cloud providers (Amazon, Microsoft, Google). |

|

Security

|

Depends on the competence of an in-house IT team. |

A cloud provider ensures infrastructure and data safety. |

|

Performance

|

If the scalability challenge is solved, show excellent query performance (measured in milliseconds). |

Serve multiple geographic locations and show great query performance (measured in seconds). |

|

Cost-effectiveness

|

The solution requires significant initial investments (hardware, IT team, training, etc.) |

No hardware-related costs. Possibility to pay only for used storage and computing resources (the pay-as-you-go model). |

Cloud Data Warehouse Key Features

Data integration and management

- Data integration with ETL/ELT processes.

- Flexible SQL querying of data.

- Ingestion of all data types (structured, semi-structured, unstructured).

- Big data ingestion.

- Streaming data ingestion.

- Full and incremental data extraction/load.

- Data transformation of varying complexity (data type conversion, summarization, etc.).

Data storage

- Subject-oriented data storage.

- Storage of time-variant (from the historical point of view) data.

- Read-only data storage.

- Integrated data storage (data is consolidated from disparate data sources).

- Metadata storage.

- Optimized data storage (columnar data storage, data compression, etc.).

Data warehouse performance

- Elastic on-demand scaling of storage and compute resources.

- Massively parallel processing database.

- Materialized view support (reusing pre-computed results of analytical queries).

- Result caching.

- Performance and concurrency dynamic management (predicting and prioritizing queries with ML).

- Fault tolerance.

Data warehouse management

- Automated infrastructure provisioning.

- Automatic data backup.

- Pre-built data source integrations.

Cloud Data Warehouse – Important Software Integrations for Reduced Costs and Time to Value

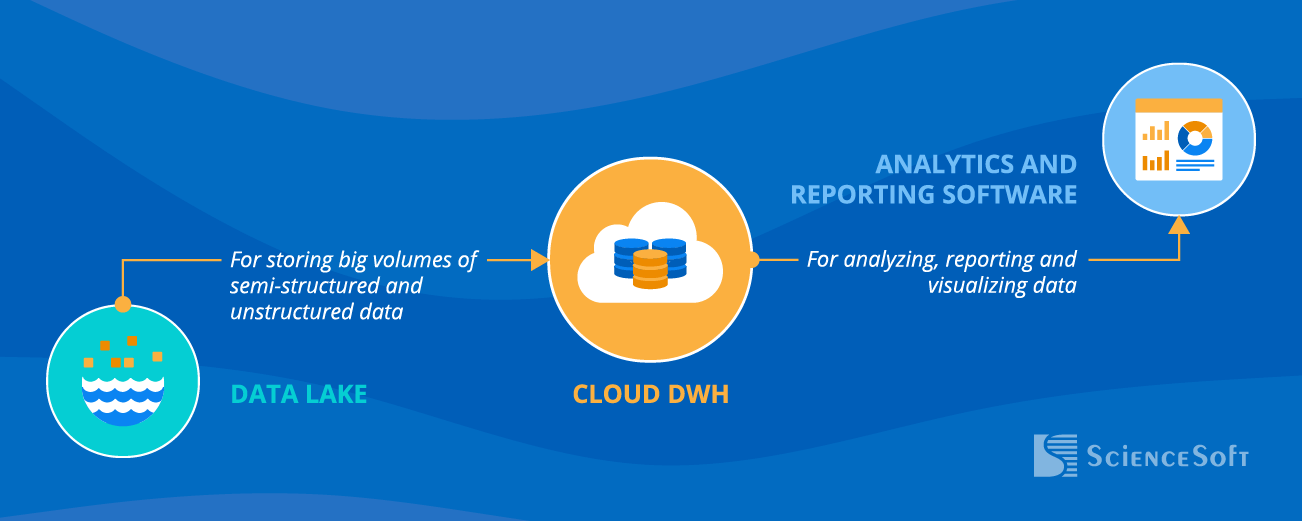

Data lake

A data lake stores big volumes of structured, semi-structured and unstructured data rarely accessed for analytical querying. Additionally, it can receive highly structured data from the data warehouse to be processed with other services (big data systems, ML systems, etc.).

Analytics and reporting software

Highly structured data from the data warehouse goes further to be analyzed, feed ML workloads, and be visualized to end-users.

How to Determine Cloud Data Warehouse Success

Meet data security and protection requirements

Choose a cloud vendor compliant with the needed regulatory requirements (for example, a cloud vendor that offers HIPAA-eligible data warehouse platforms).

To eliminate the risk of data leakage and prevent unauthorized data access, your cloud data warehouse solution should have the following capabilities:

- User authentication/authorization.

- Data access controls.

- End-to-end data encryption.

- Dynamic data masking, etc.

Choose data warehouse software with vast integration capabilities

A cloud data warehouse platform should have SDKs in common programming languages and support out-of-the-box integration with the required data sources.

Select an optimal pricing model for your data warehouse workloads

To avoid billing overage, closely monitor the cloud usage and consider various scenarios for cost savings suggested by cloud vendors, such as separate scaling of storage and compute resources, savings with reserved infrastructure commitments, billing alerts, data warehouse pausing, the pay-as-you-go model, etc.

Cloud Data Warehouse Benefits

TCO savings

Cloud data warehouse does not require purchasing and maintaining expensive hardware; it scales cost-effectively, minimizing the risk of infrastructure overprovisioning.

Decreased development costs

Decreased IT staff time due to data warehouse automation – automatic up- and down-scaling of storage and compute resources, data management tasks (data collection, aggregating, modeling).

Fast time to insight

Instant scalability, flexibility and reliability of the cloud enables data warehouse enhanced performance and availability, which results in accelerated business intelligence and, thus, faster business decisions.

Top 6 Cloud Data Warehouse Solutions

ScienceSoft's cloud data warehouse consultants have drawn up the list of 6 cloud data warehouse platforms that cover 99.9% use cases in data warehousing. The list outlines key distinctions and hidden limitations of the platforms and provides handy tips for their use.

After the list, you will find a selection tool that can help you select the best-fitting data warehouse platform for your case.

Azure Synapse Analytics

Best for: enterprise data warehousing

Azure Synapse Analytics is good for integrating data from hundreds of data sources across the company’s divisions, subsidiaries, etc. for analytical querying to be performed in seconds. Reporting on all management levels, from C-suite to directors, managers and supervisors, is protected with a fine-grained data access control.

Description

- Pre-built integrations for ingesting data from 90+ data sources.

- Loading and cleaning data from any source with Azure Data Factory.

- Storing vast amounts of data (a maximum of 240 TB for rowstore tables and unlimited storage for columnstore tables).

- Workload classification and isolation, flexible indexing options (clustered columnstore, clustered and nonclustered indexes), materialized view support, result set caching, etc. for optimized complex query performance.

- Integration with Azure Machine Learning for building ML models and generating predictions within the data warehouse.

- Compatibility with Python, R, .NET, T-SQL, Scala, Spark SQL for fulfilling various analytics tasks.

- Granular permissions on schemas, tables, views, individual columns, procedures, and other objects.

- Separate billing of compute and storage for cost savings for different data volumes and query load.

Market recognition:

- Leader in the 2025 Magic Quadrant for Cloud Database Management Systems.

- Companies using Azure Synapse Analytics: Walgreens Company, Unilever, The Co-operative Group, Marks and Spencer Group plc, Newell Brands.

Pricing

Compute:

- On-demand pricing: $1.20/hour (DW100c) - $360/hour (DW30000c).

- Reserved instance pricing can save up to 65% over the on-demand option (in a 3-year term).

Data storage: $23/TB/month ($0.04/TB/hour).

Note: No charge for the amount of data processed.

ScienceSoft's tip

Azure Synapse Analytics performance gains are usually explicitly realized when the data warehouse is more than 1 TB and has billion-raw tables. Otherwise, using Azure SQL Database may be reasonable.

Amazon Redshift

Best for: big data warehousing

Amazon Redshift enables SQL-querying of exabytes of structured, semi-structured, and unstructured data across the data warehouse, operational data stores, and a data lake with the possibility to further aggregate data with big data analytics and ML services.

Description

- Running analytic queries against terabytes to petabytes (up to 16 petabytes of data on a cluster) of structured and semi-structured data.

- Querying exabytes of structured, semi-structured and unstructured data from a data lake (Amazon S3) for analyzing without loading and transformation.

- Querying data from operational and relational databases on AWS with the federated query possibility.

- Processing big data with Hadoop/Spark using pre-built integration with Amazon EMR.

- Creating and training ML models with SQL on the data in Amazon Redshift with Amazon ML.

- Accommodating big data workloads with the Advanced Query Accelerator, result caching, materialized views and ML-based workload management.

- Possibility to pay separately for compute and managed storage (RA3 node type).

Market recognition:

- More than 6,500 deployments.

- Companies using Amazon Redshift: Pfizer, McDonald’s, FOX Corporation, Amazon.com, Intuit, Yelp, Western Digital.

Pricing

- On-demand pricing: $0.25 - $13.04/hour.

- Reserved instance pricing offers saving up to 75% over the on-demand option (a 3-year term).

- Data storage (RA3 node type): $0.024/GB/month.

Note: No charge for the amount of data processed.

ScienceSoft's tip

To load streaming data (sensor data, other real-time data), use Amazon Kinesis to capture and transform streaming data and load it into the S3 data lake. Then load the data to Redshift by chunks with the COPY command.

Google BigQuery

Best for: cost-effective storage of big volume of data with infrequent queries

BigQuery allows for cost-effective exabyte-scale storage with tables having up to 10,000 columns. It's most effective when main analytical queries either filter data according to partitioning or clustering or require scanning the entire dataset.

Description

- More than 100 pre-built data source connectors.

- Automated loading and transforming of data with Data Transfer Service (DTS).

- Querying data across object storage (Cloud Storage), transactional databases (Bigtable, Cloud SQL), spreadsheets in Drive without data movement with the federated queries support.

- Integration with the Apache big data ecosystem with Dataproc and Dataflow to read and write data directly from Big Query using the Storage API.

- Streaming data ingestion and analysis with BigQuery’s streaming insertion API, Datastream, Pub/Sub and Dataflow.

- Querying of data across clouds (AWS and Azure) with BigQuery Omni (Preview).

- Subsecond query response time with column-based storage, materialized views, cached query results, etc.

- Optimized data storage costs with the possibility to configure the default table expirations for databases and tables, partition expiration for partitioned tables, long-term storage, etc.

- Training and executing ML models on structured data with SQL using BigQuery ML, Vertex AI and TensorFlow.

- Support for T-SQL, Java, Python, C#, Go, Node.js, PHP, and Ruby.

- Simpler (compared to Amazon Redshift and Azure Synapse Analytics) database administration required due to automated management of CPU and data compressions, data encryption turn-on by default, etc.

- Granular permissions on datasets, tables, and views.

Market recognition:

- Leader in the 2025 Magic Quadrant for Cloud Database Management Systems.

- More than 3,000 companies are using Google BigQuery, including: United Parcel Service (UPS), Etsy Inc, Procter & Gamble Company, Tokopedia, Sky Group, American Eagle Outfitters.

Pricing

- Storage: $0.02– $0.04/GiB/month ($0.01– $0.02/GiB/month for long-term storage – a table/table partition not modified for 90 consecutive days). Note: the first 10 GiB is free each month.

- Compute pricing:

On-demand: $6.25 per TiB. The first 1 TiB is free each month.

Capacity pricing: $0.04–$0.1 per virtual CPU.

Capacity pricing for 1 and 3 year reservations: $0.032-$0.08 per CPU.

ScienceSoft's tip

BigQuery doesn’t provide any indexes, and many analytical queries scan the entire database. And as BigQuery charges separately per query based on the amount of data processed, consider setting up custom cost controls to limit the amount of query data processes per day.

Azure SQL Database

Best for: midsize data warehouse

Azure SQL database is a good fit for data warehousing scenarios with up to 8 TB of data volumes and a large number of active users (concurrent requests can reach up to 6,400 with up to 30,000 concurrent sessions).

Description

- Three deployment options:

- Single database - a fully managed isolated database with a guaranteed amount of compute, memory, and storage resources

- Elastic pool – a collection of single databases with a shared pool of resources.

- Managed instance – a fully managed instance of SQL Server (good for lift-and-shift migration of an on-premise SQL Server data warehouse)

- Accommodating various workloads with three service tiers: General-purpose (8 TB database storage for budget-oriented workloads), Business-critical (4 TB database storage for low-latency workloads with high resiliency to failures and fast failover), and Hyperscale (up to 100 TB of database storage)

- Dynamic scalability and autoscale features.

- Automatic index management and plan correction (problematic SQL plan performance identification and fixing).

- Intelligent Insights for monitoring database performance and alerting on performance degradation issues and getting performance improvement recommendations.

- Support for cross-database SQL queries.

- Support for .NET, Java, Node.js, Ruby, PHP, and Python.

- Always-on data encryption.

Market recognition:

- Leader in the 2025 Magic Quadrant for Cloud Database Management Systems.

- Companies using Azure SQL Database: Icertis, Paychex Inc, Axpo Holding AG, ClearSale, Schneider Electric SE, Thomson Reuters Elite.

Pricing

- General-purpose: provisioned compute – $0.505-$32.280/hour (Gen 5), locally redundant storage – $0.115/GB/month.

- Business-critical: provisioned compute - $1.359-$86.968/hour (Gen 5), storage – $0.25/GB/month.

- Hyperscale: provisioned compute – $0.366-$23.381/hour (Gen 5), storage – $0.25/GB/month.

ScienceSoft's tip

Azure SQL database provides inbuilt backup, which can be configured for longer-term retention for compliance and auditing reasons.

Azure Cosmos DB + Azure Synapse Analytics

Best for: operational data warehouse (hybrid transaction/analytical processing)

Azure Cosmos DB and Azure Synapse Analytics enable enterprise teams to run fast, cost-effective no-ETL queries on large operational real-time data sets, without copying data and impacting the performance of the company’s transactional workloads.

Description

- Storing petabytes of operational data in a column-oriented analytical store separately from an indexed row-based transactional store in Azure Cosmos DB for analytical querying.

- Automatic sync of inserts, updates, deletes to Cosmos DB operational data from transactional store to analytical store in near real time.

- Replicating data across regions configured within a Cosmos account and maintaining 4 replicas of data within a region for high availability and disaster recovery.

- Control of the retention of operational data in the analytical store at the container level with the Analytical Time-to-Live capability.

- Running near real-time large-scale no-ETL analytics on operational data in Azure Cosmos DB analytical store using Azure Synapse Link.

- Flexible indexing options (primary and secondary indexes) for executing complex analytics queries on operational data.

- Granular permissions on schemas, tables, views, individual columns, procedures, and other objects.

- Azure ML integration to build Machine Learning (ML) models in Azure Synapse Analytics for generating insights over the operational data.

- Data encryption with customer-managed keys.

Market recognition:

- Cosmos DB is a leading big data NoSQL database used by The Coca-Cola Company, NortonLifeLock Inc., Mercedes-Benz, ExxonMobil, ASOS, and Olympus Corporation.

- Azure Synapse Analytics leads the 2025 Magic Quadrant for Cloud Database Management Systems.

Pricing

Azure Cosmos DB analytical store:

- Storage - $0.25/GB/month

- Write Operations (per 10,000 operations) - $0.05

- Read Operations (per 10,000 operations) - $0.005

Azure Synapse Analytics:

Compute:

- On-demand pricing: $1.20/hour (DW100c) - $360/hour (DW30000c).

- Reserved instance pricing can save up to 65% over the on-demand option (in a 3-year term).

- Data storage: $23/TB/month.

Azure Synapse Link pricing includes the costs incurred by using the Azure Cosmos DB analytical store and the Synapse runtime.

ScienceSoft's tip

When planning your backup policy, keep in mind that the Cosmos DB analytical store doesn’t support automatic backup and restore. To restore data in the analytical store, you will need to properly configure copying data from an automatically restored Cosmos DB account to the primary Cosmos DB account.

Snowflake

Best for: cloud-agnostic data warehouse

Provided as Software-as-a-Service, Snowflake enables companies to concurrently allocate compute resources of different cloud vendors (AWS, Azure, GCP) to the same database for loading and querying data with no impact on the data warehouse performance.

Description

- Hosting a Snowflake account (storage, compute, and cloud services) on Amazon Web Services (AWS), Microsoft Azure (Azure), and Google Cloud Platform (GCP).

- Separate scaling of storage and compute resources using a storage service for persistent storage of data and virtual warehouses (an MPP compute cluster composed of multiple compute nodes allocated by Snowflake from a cloud provider) for instant query processing (processing 6 to 60 million rows of data in from 2 seconds to 10 seconds).

- Support for bulk and continuous data loading from Snowflake file stages, Amazon S3, Microsoft Azure Blob Storage, and Google Cloud Storage, regardless of the cloud platform for the storage account.

- Support for multiple service connections:

- a web-based user interface and command-line clients (e.g. SnowSQL) for managing and using Snowflake.

- ODBC, JDBC, .NET, PHP, and Node.js. drivers for connecting apps (e.g., Tableau).

- native connectors (e.g., Python, Spark) for app development

- third-party connectors for ETL tools (e.g., Informatica), and BI tools (e.g., Power BI, ThoughtSpot).

- Automated database maintenance features: built-in performance optimization, automatic clustering, materialized view maintenance, end-to-end automatic data encryption, etc.

- Avoiding accidental resource spend with the auto-pause capability.

Market recognition:

- Snowflake is a leader in the 2025 Magic Quadrant for Cloud Database Management Systems.

- Companies using Snowflake include Capital One Financial Corporation. McKesson Corporation, Harmoney Corp Limited, etc.

Pricing

On-demand and pre-purchase pricing, separate billing of storage and compute, compute billing on a per-second basis (minimum 60 seconds), etc.

- Snowflake On Demand – usage-based, per-second pricing with no long-term commitment.

- Snowflake capacity storage – pre-purchased compute usage on a per-second basis, with a minimum of 60 seconds, auto-suspend, and auto-resume capabilities.

Pricing is available by direct request to Snowflake.

ScienceSoft's tip

Snowflake’s security and compliance options vary in accordance with specific editions. Standard and Enterprise editions provide such essential security capabilities as automatic data encryption, object-level access control, multi-factor authentication, etc. Business Critical and VPS (Virtual Private Snowflake) provide more advanced data security capabilities, including customer-managed encryption keys, support for PHI data, PCI DSS compliance, etc.

Choose the Right Cloud DWH with Expert Help

Fill in the form below and our consultant will suggest an optimal cloud data warehouse software for your specific case.

Thank you for your request!

We will analyze your case and get back to you within a business day to share a ballpark estimate.

In the meantime, would you like to learn more about ScienceSoft?

- Project success no matter what: learn how we make good on our mission.

- Experience in data management and analytics since 1989: check what we do.

- 4,200+ successful projects: explore our portfolio.

- 1,400+ incredible clients: read what they say.

Short but Sufficient Comparison of Top 3 Cloud Data Warehouses

Implementation of a Cloud Data Warehouse

Since 2005, ScienceSoft renders data warehouse consulting and development services to help our clients build a flexible centralized storage on a fitting cloud platform and enable analytics capabilities to optimize internal business processes and enhance decision-making. With project management practices polished since 1989, we drive projects to their goals regardless of time and budget constraints.

Cloud data warehouse consulting

Our team:

- Analyzes your business needs and elicits requirements for a future cloud data warehouse solution.

- Designs cloud data warehouse architecture.

- Outlines the optimal cloud data warehouse platform and its configurations.

- Advises on data governance procedures.

- Designs a cloud data warehouse implementation/migration strategy.

- Conducts admin trainings.

- Delivers PoC for complex projects.

Cloud data warehouse implementation

Our team:

- Analyzes your business needs and defines the required cloud data warehouse configurations.

- Delivers PoC for complex projects.

- Does data modeling and sets up ETL/ELT pipelines.

- Develops and integrates a cloud data warehouse into the existing data ecosystem.

- Runs QA.

- Provides user training and support, if required.

About ScienceSoft

ScienceSoft is a global IT consulting and IT service company headquartered in McKinney, TX, US. Since 2005, we assist our clients in delivering data warehouse solutions with the help of end-to-end data warehousing services to encourage agile and data-driven decision-making. Our long-standing partnerships with global technology vendors such as Microsoft, AWS, Oracle, etc. allow us to bring tailored end-to-end cloud data warehousing solutions to business users. Being ISO 27001-certified, we guarantee cooperation with us does not pose any risks to our clients' data security.